AI in software testing: real capabilities, real limits

Artificial intelligence is already embedded in modern software testing, from generating test cases and stabilizing UI automation to prioritizing tests in CI/CD pipelines. At the same time, bold claims about autonomous AI testers and self-directed test agents continue to outpace reality.

This article offers a practical, evidence-based overview of AI in software testing: what works in production today, what’s emerging from research, and what remains hype. Drawing on industry tools, academic findings, and real QA workflows, it helps testing teams understand where AI genuinely improves quality, and where human judgement is still irreplaceable.

Introduction: world’s first fully autonomous AI software engineer

In 2024, a product called Devin AI, was introduced by Cognition Labs as the “world’s first fully autonomous AI software engineer”. The authors claimed that Devin could independently plan, code, debug, and deploy entire applications based on natural language prompts — essentially, perform typical developer tasks. This announcement gained significant media attention, suggesting a future where AI could fully replace human software developers.

However, upon closer examination, it became evident that Devin’s capabilities were overstated. While the AI demonstrated some ability to generate code snippets, it struggled with even basic tasks, often producing errors or requiring substantial human intervention. Critics labeled it as “publicity hype”, highlighting the dangers of overstating AI’s current capabilities. What’s even funnier is that Devin job openings still list “software engineer” positions, proving that the product can’t do what it claims to be able to.

In software testing, particularly in test automation, there’s much marketing hype now too. Certainly, some AI-powered capabilities are already in production use today and deliver real value. Others are being explored in academic and industry research, with early results but limited adoption. And some widely spread ideas, like AI doing exploratory testing on its own — remain only in the imagination and pure speculation.

In this whitepaper, I’m going to explore the landscape of AI use in testing automation, covering what’s working, what’s emerging, and what’s still hype.

What’s working in AI Test Automation now

AI in software testing isn’t new, it has decades of research. Papers on defect prediction, test generation, and test suite optimization have been published as early as the 1970s. What’s changed is the speed at which research ideas now move into tools: AI research often makes its way into practice almost instantly, sometimes before it’s even formally peer-reviewed.

That said, not every research breakthrough leads to usable technology. Many techniques that perform well in lab conditions fall short in real-world QA environments, especially when data quality is poor or project constraints are messy. Fortunately, critical evaluations are published just as frequently as optimistic results, which makes it easier to cut through the noise.

This section focuses on what’s currently working in production: AI applications that have been widely adopted, deliver measurable value, and operate reliably across different teams and contexts.

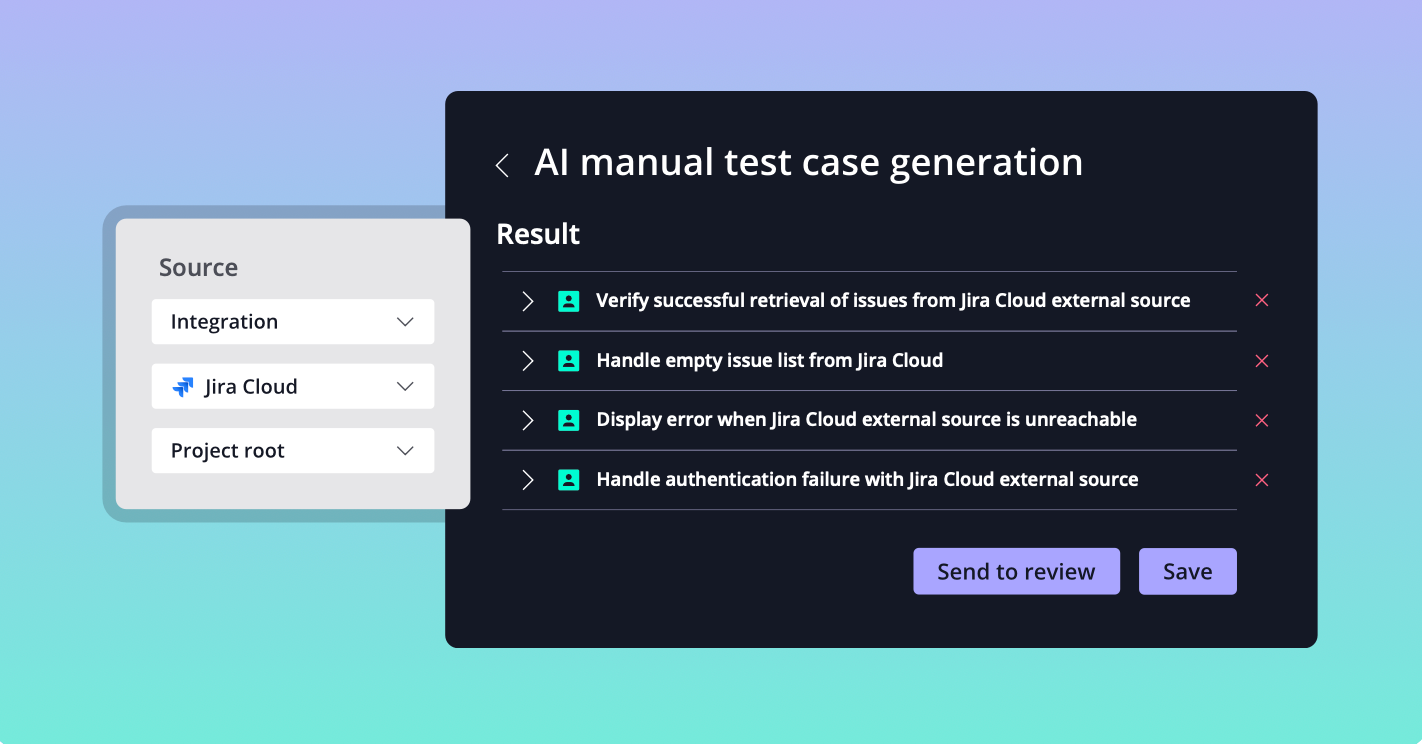

Test case generation from requirements

One of the most widely adopted and reliable uses of AI in test automation today is generating test cases from requirements written in natural language. Tools that support this typically use off-the-shelf large language models (LLMs), custom NLP pipelines, and often add custom fine-tuned prompts to transform structured specifications (user stories, acceptance criteria, or functional descriptions) into structured manual test cases.

Read more:

This is especially effective when requirements are well-structured and “deterministic”; while review is still needed, it accelerates documentation and reduces oversight.

Additionally, some tools (our AIDEN, for example) assist in expanding coverage by suggesting edge and negative test cases. These might include scenarios like leaving a password field empty, entering malformed input, or combining actions in non-obvious ways. This helps diversify the test suite without requiring testers to brainstorm every corner case themselves.

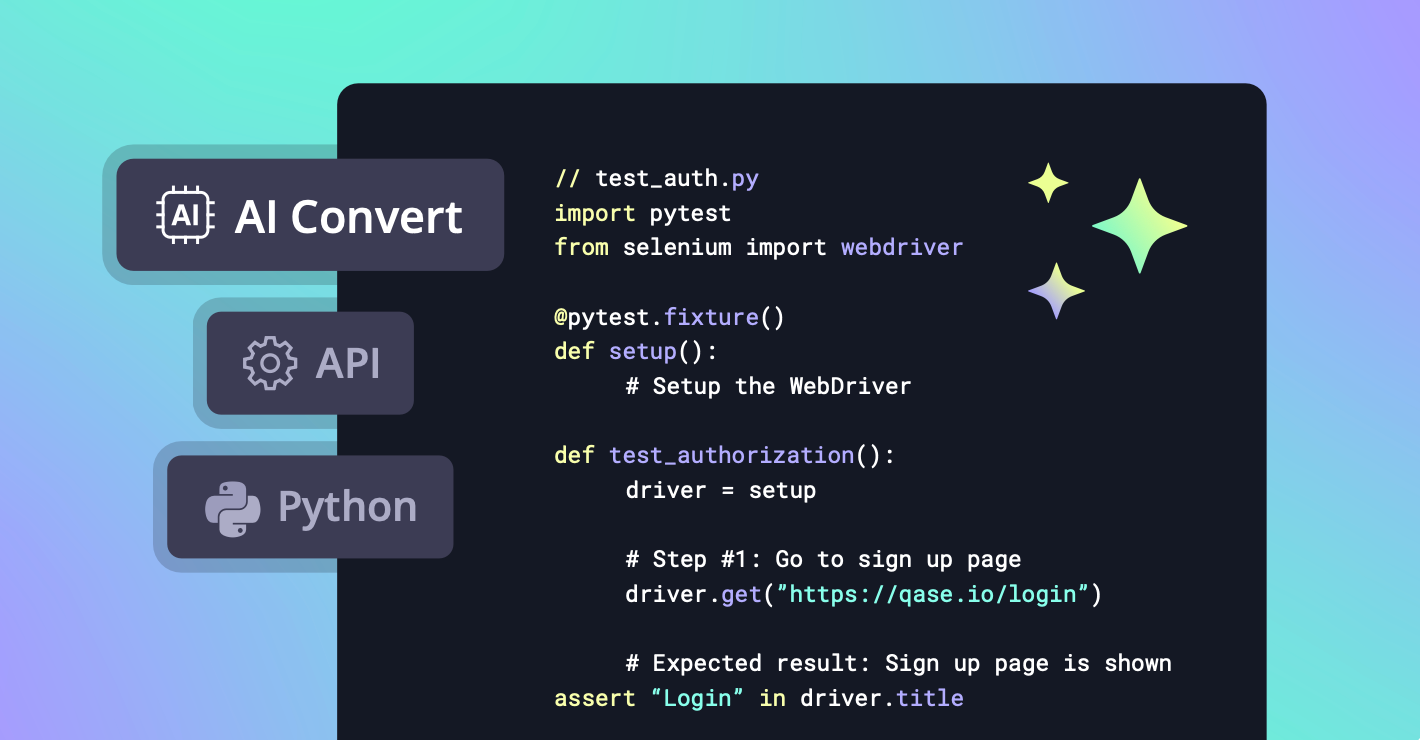

AI-assisted test case conversion (manual to automation)

Another practical application of AI in testing is converting existing manual test cases into automated ones. This works best when the manual cases are clearly structured, follow repeatable steps, and describe deterministic behaviour. In such cases, AI can interpret the logic, translate the steps into executable scripts, and output runnable tests, often without requiring the tester to write any code.

While human review is still important, especially for risky scenarios and complex flows, this kind of assisted conversion helps teams scale automation without scaling headcount, and without getting bogged down in boilerplate scripting.

Self-healing locators in UI automation

In UI automation, one of the most common sources of test failures is changes to element identifiers: button IDs, class names, or other structure changes within the DOM. Self-healing locators aim to reduce this brittleness by automatically detecting and adapting to such changes during test execution.

This feature is now production-ready and supported in many commercial tools. It works by using heuristics or lightweight machine learning to match a missing or broken element to a likely replacement based on other attributes, e.g. visible text, position, tag structure, or role.

If a match is confidently found, the test proceeds without failure.

This can significantly reduce test flakiness and maintenance overhead in regression suites, especially when changes to the UI are cosmetic or involve non-functional restructuring.

Self-healing isn’t a silver bullet. First, it works best when changes are minor and the UI context is stable. It can introduce false positives if the wrong element is matched and the test appears to pass.

Most mature teams use it alongside good locator strategies, alerts, or human-in-the-loop review for critical paths. As with many AI-driven features in testing, the value lies in reducing noise and repetitive rework, not in eliminating human oversight.

Log anomaly detection and signal extraction

Modern systems generate massive logs during testing, deployment, and production. Sorting through this data is time-consuming and often delays debugging. AI can help by detecting anomalies and surfacing meaningful signals.

In QA contexts, this helps spot flaky patterns, surface silent regressions, and speed up triage. While not “testing AI” per se, it’s one of the most practical ML applications for debugging complex systems, especially when logs are integrated into test pipelines.

This is already standard in observability platforms like: Elastic, Datadog, Splunk These tools use unsupervised learning and statistical techniques to flag deviations such as new errors, failure spikes, or behaviour shifts.

Read more:

Test selection and prioritisation in CI/CD

Running all tests on every commit is often inefficient in large systems with big regression suites. AI can help by selecting and prioritising tests based on their likelihood to catch new issues.

Tools like Launchable use ML and heuristics to analyse historical failures, coverage, and code changes, identifying the most relevant tests per commit. High-risk tests run first; low-risk ones are deferred or skipped.

This approach can significantly reduce CI time and improve feedback loops, but only if teams have clean historical data and tagging. Without that, predictions can be noisy. For teams with good infrastructure, the payoff is substantial. For others, setup and data quality remain key showstoppers.

Draft generation for test plans and documentation

AI tools powered by large language models can scaffold first drafts of QA documents like test plans, bug reports, and checklists. This reduces the friction of starting from scratch, especially under time pressure.

For example, a tester might prompt the tool to draft a regression checklist or a release test plan based on feature scope. Human review remains essential, but AI speeds up the writing process, helping teams focus on content, not formatting.

Like with code generation, the quality of input and review makes all the difference. The value here lies in speeding up the writing process, not in replacing critical thinking.

Read more:

What’s emerging in research and experiments

Read more:

Tools such as mabl, Testim, and Functionize, support converting structured manual test cases into automation scripts using AI or natural language processing. AIDEN in Qase takes this a step further by pre-executing each step during conversion. This helps catch ambiguous instructions or invalid assumptions early, ensuring that the resulting automation is not only syntactically correct, but functionally valid in the target environment.

Defect Prediction Using Historical Data

Defect prediction involves using historical software data to identify components of a system that are likely to contain defects in the future. This approach aims to optimize testing efforts and improve software quality by focusing resources on the most error-prone areas.

Despite promising results in research, defect prediction using historical data remains difficult to adopt in real-world settings.

Key Challenge: Data quality: prediction models rely on accurate, consistent records of past defects and code changes, but many projects lack the necessary tagging discipline or historical depth. Even when data is available, models often don’t generalise well — what works for one team or codebase may perform poorly on another due to differing architectures, coding practices, or defect definitions.

Another issue is interpretability. More advanced models, especially deep learning-based ones, can act like black boxes, offering predictions without clear reasoning. This undermines trust and makes it harder for teams to take action based on the output. Finally, integrating these tools into existing workflows isn’t straightforward. Development and QA teams often need to adapt their processes and tooling to benefit from predictive models — and that can be a tough sell when the models themselves still show inconsistent reliability.

Read more:

Test Suite Optimization and Reduction

As software systems evolve, their test suites often grow extensively, leading to increased execution times and maintenance overhead. Test suite optimization and reduction techniques strive to address this by identifying and eliminating redundant or low-impact test cases, ensuring that the most critical tests are prioritized.

Despite a growing body of research, test suite optimisation and reduction remain difficult to apply reliably in real-world projects.

Key Challenge: Data quality: effective optimisation requires detailed test execution histories, failure logs, and accurate code coverage, i.e. data which many teams simply don’t collect or maintain consistently. Even when data is available, optimisation models often don’t transfer well across projects. Techniques that work in one codebase may be ineffective in another, due to differences in architecture, test strategy, or how failures manifest.

Another concern is the risk of over-reduction: models might classify some test cases as redundant, even though they catch rare or edge-case bugs. This can lead to reduced fault detection, especially if the optimisation process isn’t well validated. Finally, integration adds friction. Teams need to adjust existing workflows and tools to take advantage of optimisation algorithms, and the return on investment isn’t always obvious, particularly if test suites aren’t huge to begin with.

Read more:

ML-powered visual testing

Visual regression testing focuses on identifying unintended visual changes in a user interface (UI) after code modifications. Traditional methods often involve pixel-by-pixel comparisons, which can be sensitive to minor, non-critical differences, leading to false positives. Machine learning (ML) introduces a more intelligent approach by understanding the context and significance of visual changes.

Despite the growing interest and promising research, ML-powered visual testing still faces several practical limitations.

Key Challenge: Prevalence of false positives: models may flag harmless or acceptable changes, such as font rendering differences or minor layout shifts, as regressions. This forces teams to manually inspect many issues, which undermines the promised efficiency gains.

Read more:

Another limitation is the need for diverse and representative training data. To distinguish meaningful changes from noise, models must be exposed to a wide variety of UIs, resolutions, themes, and languages. Gathering this data at scale is difficult. Integration also remains a barrier: these tools often require additional infrastructure or changes in test pipelines, making adoption harder for teams with limited time or tooling flexibility.

Finally, computational cost can become an issue. Image-based testing with ML adds processing overhead, particularly in CI pipelines where speed is critical. As a result, while ML-powered visual testing is promising (especially for responsive UIs and localisation), it still requires careful human oversight and isn’t a drop-in replacement for conventional UI testing practices.

Synthetic Test Data Generation

Synthetic test data generation involves creating artificial datasets that mimic the structure and statistical properties of real-world data. This approach is particularly valuable in scenarios where using actual data is impractical due to privacy regulations or the unavailability of comprehensive datasets.

While synthetic test data generation is becoming increasingly valuable, particularly in regulated environments, it still faces real limitations.

Key Challenge: Chief among them is realism: synthetic datasets rarely capture the messy, unpredictable nature of real-world inputs. Edge cases, unexpected combinations, and failure-triggering behaviours are hard to simulate without grounding the model in real usage data. Another key issue is diversity. Even when using LLMs or generative models, synthetic data tends to reflect average or “safe” cases unless explicitly guided, which limits its effectiveness in robustness testing. )

That said, among the newer AI-driven testing techniques, synthetic data generation is relatively mature. Not because it’s fully solved, but because it’s practically useful right now. Tools exist, frameworks are evolving, and in many contexts, especially where privacy matters, synthetic data can unblock workflows that would otherwise stall. The most effective strategy remains combining synthetic and real data, using the former to scale coverage and the latter to anchor tests in lived complexity.

LLM-powered test log summarisation and triage

As software systems age and grow, the volume and diversity of logs generated during testing and operation grows exponentially. Analyzing these logs to identify issues, anomalies, or areas requiring attention sometimes gets impossible to do manually. LLMs offer a promising solution by automating the summarization and triage of test logs, thereby accelerating the debugging and quality assurance processes.

Though the research shows the use of LLMs for this particular purpose is very promising, it’s still limited in several important ways.

First, the usefulness of the output depends heavily on how prompts are written. Minor changes in phrasing can lead to vastly different results, making the process brittle unless teams invest time in designing and refining prompt strategies.

Second, logs are notoriously domain-specific, filled with system-specific jargon, unique error codes, or custom formats. General-purpose LLMs often struggle to make sense of this context unless they are fine-tuned on in-domain data, which many teams don’t have the resources to do.

Third, performance can be a concern. Running LLMs on large volumes of logs, especially

in real-time, can be computationally expensive, making it difficult to scale for high-throughput environments. Lastly, integrating these tools into existing pipelines or observability stacks requires effort and customisation, particularly if teams want to maintain traceability or auditability in the output. For now, LLMs can help testers and SREs save time on log interpretation, but their outputs still require careful review and validation to avoid missing or misinterpreting critical issues.

AI-Assisted Exploratory Test Agents at Scale

Exploratory testing depends on human intuition and domain knowledge. Testers learn as they interact with a system, uncovering usability issues, edge cases, and unexpected behaviours. AI-assisted agents aim to scale parts of this process by running semi-structured flows (often designed by humans) across many devices or environments, introducing small variations or stochastic behaviours to simulate different user paths.

Read more:

In practice, this means AI can repeat exploratory-like interactions across device farms, operating systems, or configurations, effectively scaling what would otherwise be time-consuming manual effort. This is particularly useful in mobile and embedded environments, where device diversity makes full manual exploration expensive. Some teams already use this approach to complement human testers, helping uncover environment-specific bugs and validating system behaviour under varied conditions.

However, this technique comes with important limitations. First, AI agents lack contextual understanding and intent. They can simulate interactions, but they don’t reason about why a flow matters or what the user is trying to achieve. As a result, they miss high-level issues like confusing interfaces, poor feedback, or accessibility gaps — the kinds of insights human testers uncover naturally.

Second, maintaining agent frameworks at scale is complex. Scripting safe randomness, controlling application state, and debugging intermittent behaviours require ongoing engineering effort. Integration is another challenge: running these agents in CI, capturing meaningful data, and incorporating findings into existing workflows demands robust infrastructure and team commitment.

Still, for teams already operating at scale, particularly in device-heavy contexts, AI-assisted exploratory agents can expand behavioural coverage and help identify issues that scripted tests might miss.

This idea was discussed in a webinar between Vitaly and Alexey Shagraev (CEO of lovi.care), where they explored how AI agents could complement crowdsourced testers by running exploratory sessions at scale, increasing repeatability while capturing behavioural diversity across real-world devices.

What’s still speculative or hyped

Not everything promoted under the banner of AI in testing is usable today, or even realistic. Some ideas have strong conceptual appeal but fall apart under scrutiny due to technical limitations, lack of scalability, or misaligned incentives. Others are backed by early-stage research that’s still years away from being production-ready.

Read more:

Autonomous Black-Box Test Generation from User Behaviour

Automatically generating test cases from user interactions is an interesting idea. It promises to reduce manual effort and improve coverage by learning from real behaviour. But in practice, it remains largely out of reach.

The core issue is a lack of intent understanding. These systems can replicate click paths or navigation patterns, but they don’t understand why users act the way they do. As a result, they often generate tests that execute without errors but validate nothing meaningful. Worse, because most behavioural data reflects common usage, the models tend to overfit to happy paths, precisely the flows least likely to reveal serious bugs.

Even when test cases are generated, the signal-to-noise ratio is poor. Outputs are often redundant, flaky, or misaligned with testing goals, requiring heavy manual review and cleanup. Deploying these systems at all demands significant infrastructure: telemetry pipelines,

data cleaning, and orchestration layers that many teams simply don’t have. And even then, generalisation is a problem. What works on one app or platform often fails elsewhere without substantial fine-tuning.

In short, while the concept is attractive, current implementations are brittle, narrow in scope, and costly to maintain — far from the plug-and-play vision often sold in marketing.

Generalised test case prioritisation via ML

As test suites grow, running every test on every commit becomes impractical. Test case prioritisation (TCP) aims to order test execution so that the most valuable or failure-prone tests run first. Machine learning offers a promising way to automate this by learning patterns from historical test data.

But while the idea is well-represented in academic literature, real-world adoption remains rare. One major barrier is data dependency: effective prioritisation models must be trained on internal project artefacts such as historical test outcomes, coverage maps, failure logs, and commit histories. Off-the-shelf LLMs are not suitable, since they lack access to this data and are trained on a different dataset. Retraining is also not a one-time task: as the codebase evolves, so must the model. That makes TCP a moving target, where retraining becomes an ongoing cost in both compute and engineering time.

Interpretability is another concern. Deep learning models can recommend which tests to run, but often can’t explain why. In quality-critical environments like finance or medical systems, this opacity makes the approach hard to trust or justify.

Finally, infrastructure requirements are non-trivial. Productionising these models demands robust pipelines, historical data hygiene, and ML engineering expertise — resources many QA teams simply don’t have. As a result, ML-based TCP remains an appealing idea, but one that hasn’t yet crossed the gap from research to routine use.

Self-Directed Exploratory Testing Agents

Some of the most ambitious claims in AI testing involve autonomous agents that can explore applications like human testers, clicking through interfaces, discovering edge cases, and identifying unexpected behaviours without explicit scripts or scenarios. While this idea has gained traction in marketing materials, there’s no real practical application so far.

The research is ongoing but not production-ready. In one of the recent papers [1], scientists proposed new methods to help large models like GPT-4o perform goal-directed exploration. While the paper reports encouraging results (up to 30% improvement on benchmark tasks), it ultimately presents a research direction, not a deployable system.

The agent’s behaviour is still fragile, compute-heavy, and heavily reliant on curated environments and fine-tuning.

Additionally, risk misalignment is currently unquantifiable [2]: autonomous agents can pursue unexpected or even harmful strategies when left unsupervised. Since these agents can dynamically interact with systems,

AI-to-AI interactions may produce emergent behaviours that developers cannot predict or audit. The legal and ethical implications are still poorly understood.

What’s worse, models trained on observational data often behave irrationally when turned into agents [3]. They suffer from issues like auto-suggestive delusions and predictor-policy incoherence, where the agent’s own actions distort its internal state or expectations. These issues can only be resolved by training the model on its own actions, a costly and complex process that rules out using generic LLMs like ChatGPT or Gemini in agentic roles.

Most existing agent frameworks depend on extensive scaffolding: reflection loops, planning graphs, state evaluation heuristics, and external tools like Monte Carlo Tree Search. Without these, the agents behave like click bots, lacking prioritisation, meaningful hypotheses, or judgement.

From Agents to Autonomy: the final leap or a hallucination?

The idea of AI agents independently exploring and learning from software has undeniable appeal. If an AI can navigate an app, detect regressions, and even learn from mistakes, what’s left for a human tester to do? That’s the line of thought behind one of the most persistent and misleading claims in AI test automation today: that testers will soon be fully replaced.

Replacing Testers with AI: still a myth

This absence of contextual judgement is especially problematic in domains like finance, healthcare, or public services, where misjudgements can lead to significant harm.

Even with recent advances in planning and agentic behaviour, AI-based systems remain ill-suited for exploratory testing, which depends on understanding intent. Unlike human testers, they do not revise their focus when the product or business landscape changes, for instance, when a previously minor feature becomes critical due to market changes.

Adapting to such shifts requires fine-tuning on updated, domain-specific data, which is both technically demanding and extremely costly. The compute cost of training even an old model like GPT-3, for example, is estimated between $500,000 and $4.6 million, making this approach economically impractical for many organisations.

I-MCTS architecture featuring (a) Introspective node expansion through parent/sibling analysis, (b) Hybrid reward calculation combining LLM predictions and empirical scores. The red arrows indicate the introspective feedback loop that continuously improves node quality.

Even the most advanced architectures, like those using ExACT’s Reflective Monte Carlo Tree Search (R-MCTS), still rely on elaborate scaffolding to function. These systems can optimise behaviour based on past interactions, but they don’t form hypotheses, question assumptions,

or reason about goals. Their decision-making is driven by heuristics and predefined objectives, not understanding.

AI agents also lack the ability to reprioritise. Human testers shift focus dynamically, probing known weaknesses, adapting to new risks, and switching between functional checks and usability heuristics. AI systems, by contrast, follow static objectives defined in advance. Without explicit guidance or dynamic reward shaping, they will miss emergent issues or continue down unproductive paths.

Conclusion: AI testing is real, and so are its limits

AI is already transforming parts of the testing lifecycle, from generating test cases to helping teams prioritise what to run. These capabilities are not futuristic: they are in production use, delivering real value today. At the same time, we must be honest about what AI cannot yet do, especially when it comes to adaptive reasoning, contextual judgement, and exploratory testing.

Some emerging ideas, like agentic testing or test log summarisation, show promise but remain fragile or unproven at scale. Others, like the dream of fully autonomous testing systems, are, at best, speculative. The risks of overreliance on such promises are high: wasted effort, distrust, and missed bugs.

That’s why critical thinking is so essential right now. Understanding what’s real, what’s emerging, and what’s still just hype allows teams to invest wisely — not just in AI, but in the humans who guide, evaluate, and improve its use. The future of AI in testing isn’t about replacement. It’s about partnership: helping testers focus on what only they can do, while machines take on what they do best.