Introduction

An API (Application Programming Interface) is a set of protocols, routines, and tools for building software and applications. It acts as an intermediary between software systems, allowing them to communicate and exchange data. An API defines how software components should interact.

APIs are important because they provide a number of benefits to software developers, businesses, and users. They:

- Enable integration: APIs allow different systems to communicate and exchange data, making it easier to integrate different technologies and create seamless experiences for users.

- Increase efficiency: By using APIs, developers can save time and effort when building applications, as they can leverage existing functionality and data from other systems.

- Foster innovation: APIs open up new possibilities for innovation by providing access to a wealth of data and functionality from other systems, allowing developers to create new and unique applications.

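- Improve security: APIs give systems a controlled way to communicate. A provider exposes only the operations and data it chooses to, and, combined with authentication and access control, this reduces the risk of security vulnerabilities and data breaches.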

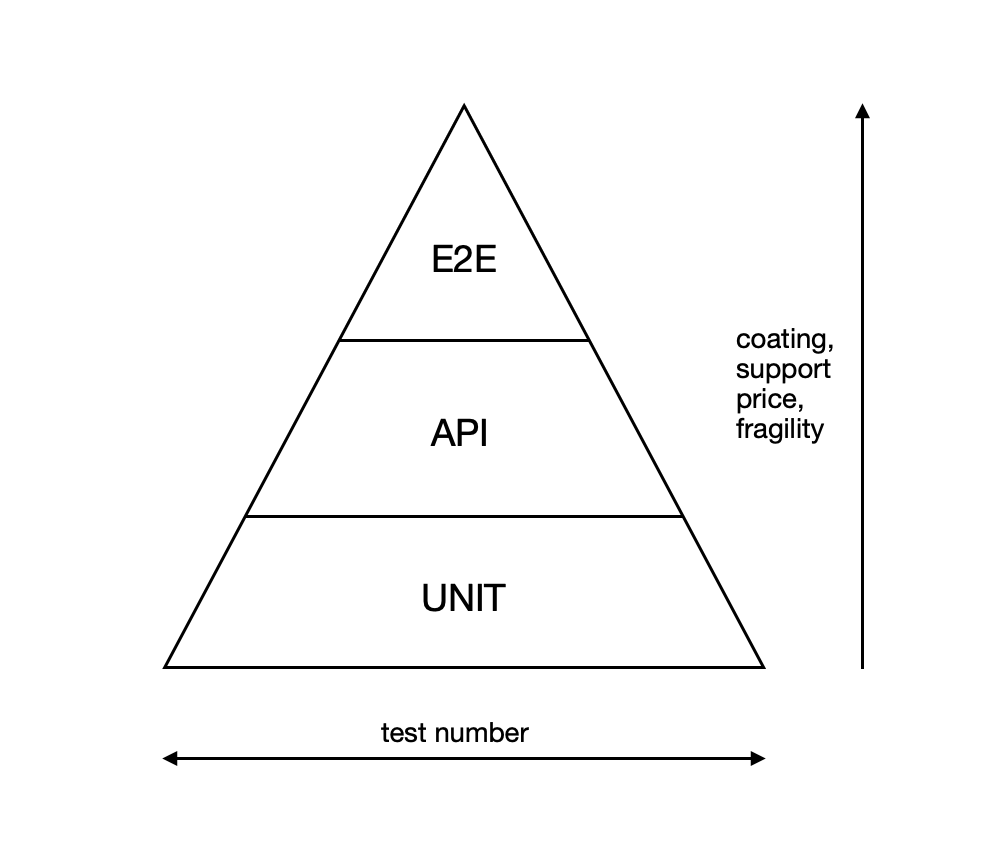

APIs in the testing pyramid

In software testing, the testing pyramid is a visual representation of the different types of tests. It shows that unit tests should form the largest part of the testing effort, followed by integration tests and a smaller number of end-to-end tests.

API tests usually fall into the integration test category: they validate the communication between software components and ensure that the components work correctly together.

If an API is not tested properly, the communication between systems may break, leading to unexpected behavior, errors, and even security vulnerabilities. Additionally, APIs are often used by multiple clients, which makes them a critical component of the system that needs to be tested.

One way to test APIs is through API contracts, which we discuss next.

API contract testing

API contract testing is a technique for testing the behavior of an API to ensure that it adheres to the agreed-upon contract between the API provider and its clients. It helps validate the API's behavior and catch breaking changes before they affect clients. Key features of API contract testing include:

- Validation of API behavior: API contract testing validates the behavior of the API to ensure that it works as expected and adheres to the agreed-upon contract.

- Detection of breaking changes: API contract testing helps to detect any breaking changes in the API before they affect clients, reducing the risk of compatibility issues and unexpected behavior.

- Improved collaboration: API contract testing helps to improve collaboration between API providers and clients by providing a shared understanding of the API's behavior.

- Automated testing: API contract testing can be automated, making it easier to perform on a regular basis and reducing the risk of human error.

- Increased confidence: API contract testing helps to increase confidence in the API by providing a repeatable and reliable method for testing its behavior.
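To make the idea concrete, here is a minimal sketch of a contract check. The contract format (a map from field name to an expected JSON type and a required flag) and the checkContract and jsonType helpers are hypothetical, chosen for illustration; real contracts are usually written in OpenAPI or JSON Schema and checked with a dedicated validator.

```javascript
// Hypothetical contract format: field name -> { type, required }.
// Types use JSON vocabulary: "string", "integer", "number", "boolean", "array", "object", "null".

// Return the JSON type of a value. typeof alone is not enough:
// typeof [] and typeof null are both "object".
function jsonType(value) {
  if (value === null) return "null";
  if (Array.isArray(value)) return "array";
  if (typeof value === "number") return Number.isInteger(value) ? "integer" : "number";
  return typeof value; // "string", "boolean", or "object"
}

// Compare a response body against the contract and list every violation.
function checkContract(contract, body) {
  const violations = [];
  for (const [field, rule] of Object.entries(contract)) {
    if (!(field in body)) {
      if (rule.required) violations.push(`missing required field "${field}"`);
      continue;
    }
    const actual = jsonType(body[field]);
    // An integer value also satisfies a "number" rule.
    const ok = actual === rule.type || (rule.type === "number" && actual === "integer");
    if (!ok) violations.push(`field "${field}": expected ${rule.type}, got ${actual}`);
  }
  return violations;
}

// Example: the contract promises an array, but the server returns an object.
const contract = {
  id: { type: "integer", required: true },
  cases: { type: "array", required: true },
};
const violations = checkContract(contract, { id: 6, cases: { 0: 1 } });
console.log(violations.join("\n")); // field "cases": expected array, got object
```

Distinguishing arrays and null from plain objects matters here: a check built on typeof alone would accept an object where the contract promises an array.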

Testing process

This article describes how we automatically generate and run tests for our contracts.

We maintain an OpenAPI spec that describes every endpoint, the parameters each one accepts, and all the responses it can return: https://github.com/qase-tms/specs/blob/master/api.yaml

This OpenAPI spec is our contract.

Simply put, the contract gives developers recipes for using the API.

For example, our spec contains samples like these:

Request:

```shell
curl --request GET \
  --url https://api.qase.io/v1/attachment/XXXXX \
  --header 'Token: XXXXX' \
  --header 'accept: application/json'
```

The spec says the response will match the following pattern:

```json
{
  "status": true,
  "result": {
    "hash": "string",
    "file": "string",
    "mime": "string",
    "size": 0,
    "extension": "string",
    "full_path": "string"
  }
}
```

And here is the server's actual response:

```json
{
  "status": true,
  "result": {
    "hash": "ad65a70dclk6f6507e83bf69a92864bf0ae814ce6",
    "file": "Screen Recording 2022-12-01 at 17.59.45.mov",
    "mime": "video/quicktime",
    "size": 15770822,
    "extension": "mov",
    "full_path": "https://qase-app-prod…"
  }
}
```

All good so far. What could possibly go wrong?

We once witnessed the following situation:

According to our spec, the following request:

```shell
curl --request GET \
  --url https://api.qase.io/v1/suite/DEMO/6 \
  --header 'Token: 964165568f1dea4cba682c206602850553de247b' \
  --header 'accept: application/json'
```

would result in a response matching this pattern:

```json
{
  "status": true,
  "result": {
    "id": 0,
    "title": "string",
    "description": "string",
    "preconditions": "string",
    "position": 0,
    "cases_count": 0,
    "parent_id": 0,
    "created_at": "2021-12-30T19:23:59+00:00",
    "updated_at": "2021-12-30T19:23:59+00:00"
  }
}
```

However, the server replied with something unexpected:

```json
{
  "status": true,
  "result": {
    "id": 6,
    "title": "Test suite",
    "description": null,
    "preconditions": null,
    "position": 1,
    "cases_count": 4,
    "parent_id": null,
    "created": "2022-03-31 13:57:56",
    "updated": "2022-03-31 13:57:56",
    "created_at": "2022-03-31T13:57:56+03:00",
    "updated_at": "2022-03-31T13:57:56+03:00"
  }
}
```

As you can see, the response no longer matched the pattern from the spec:

- new created and updated fields appeared,

- these fields use a different date format ("2022-03-31 13:57:56") from the ISO 8601 format the spec uses for created_at and updated_at.
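A check that would have caught this mismatch compares the field names in the spec's pattern with those in the actual response, and tests date values against the spec's format. The sketch below uses the two result objects from the example above; the diffFields helper and the regular expression are illustrative assumptions, and a real contract test would compare against the spec's schema rather than a sample.

```javascript
// "result" object from the spec's pattern for GET /suite/{code}/{id}.
const specResult = {
  id: 0, title: "string", description: "string", preconditions: "string",
  position: 0, cases_count: 0, parent_id: 0,
  created_at: "2021-12-30T19:23:59+00:00", updated_at: "2021-12-30T19:23:59+00:00",
};

// "result" object the server actually returned.
const actualResult = {
  id: 6, title: "Test suite", description: null, preconditions: null,
  position: 1, cases_count: 4, parent_id: null,
  created: "2022-03-31 13:57:56", updated: "2022-03-31 13:57:56",
  created_at: "2022-03-31T13:57:56+03:00", updated_at: "2022-03-31T13:57:56+03:00",
};

// Hypothetical helper: list fields present in only one of the two objects.
function diffFields(expected, actual) {
  const expectedKeys = new Set(Object.keys(expected));
  const actualKeys = new Set(Object.keys(actual));
  return {
    extra: [...actualKeys].filter((k) => !expectedKeys.has(k)),
    missing: [...expectedKeys].filter((k) => !actualKeys.has(k)),
  };
}

const { extra, missing } = diffFields(specResult, actualResult);
console.log("extra:", extra.join(", ")); // extra: created, updated
console.log("missing:", missing.length); // missing: 0

// ISO 8601 date-time with an offset, as in the spec's created_at example.
const isoDateTime = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}[+-]\d{2}:\d{2}$/;
console.log(isoDateTime.test(actualResult.created)); // false
console.log(isoDateTime.test(actualResult.created_at)); // true
```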

This happened because a developer changed the backend code but forgot to update the specification.

Sometimes things were much worse:

- in one case, the spec expected a field to hold an array, but the server started responding with an object

- the spec described a request field as optional, but the server required it
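The second scenario only surfaces if a test actually sends a request without the "optional" field. One way to provoke it, sketched here under the assumption that the request schema follows OpenAPI's required/properties layout, is to send a body containing only the required properties: if the server rejects it with a validation error (for example, 400 or 422), the spec's required list is wrong. The minimalBody helper and the schema below are hypothetical, not the real Qase schema.

```javascript
// Hypothetical request schema in OpenAPI style: "required" lists the mandatory properties.
const requestSchema = {
  type: "object",
  required: ["title"],
  properties: {
    title: { type: "string" },
    description: { type: "string" },
    cases: { type: "array", items: { type: "integer" } },
  },
};

// Keep only the properties the schema marks as required, dropping every optional one.
function minimalBody(schema, fullBody) {
  const body = {};
  for (const name of schema.required ?? []) {
    if (name in fullBody) body[name] = fullBody[name];
  }
  return body;
}

const fullBody = { title: "Automation Test Plan", description: "description", cases: [1] };
console.log(JSON.stringify(minimalBody(requestSchema, fullBody))); // {"title":"Automation Test Plan"}
```

A contract test would send this minimal body and expect a successful status; sending the full body as well covers the optional fields.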

The situation would be even more complicated if our spec had multiple versions: developers would have to pay even more attention to keeping every version accurate and up to date.

The human factor is one of the most common causes of errors.

We decided to try automated contract testing to help with this category of problems.

This approach means generating automated tests for the whole spec, including its examples.

Here’s how it works:

- We generate a Postman collection in JSON format from the spec

- We fill the Postman collection with semantically correct data

- We run the Postman tests in our CI pipeline with Newman
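Covering the whole spec starts with knowing every operation it defines. Portman handles this when it converts the spec; the sketch below only illustrates the idea. It assumes the spec has already been parsed from YAML into a plain object, and the spec fragment, its operationId values, and the listOperations helper are hypothetical.

```javascript
// HTTP methods that OpenAPI 3 allows as keys of a path item.
const METHODS = ["get", "put", "post", "delete", "options", "head", "patch", "trace"];

// Return one entry per (method, path) pair; each entry is a candidate contract test.
// Non-method keys of a path item (such as "parameters") are skipped.
function listOperations(spec) {
  const operations = [];
  for (const [path, pathItem] of Object.entries(spec.paths ?? {})) {
    for (const method of METHODS) {
      if (pathItem[method]) {
        operations.push({ method: method.toUpperCase(), path, operationId: pathItem[method].operationId });
      }
    }
  }
  return operations;
}

// A tiny hypothetical spec fragment, already parsed from YAML.
const spec = {
  openapi: "3.0.0",
  paths: {
    "/suite/{code}/{id}": { get: { operationId: "get-suite" }, delete: { operationId: "delete-suite" } },
    "/plan/{code}": { get: { operationId: "get-plans" }, post: { operationId: "create-plan" } },
  },
};

for (const op of listOperations(spec)) console.log(op.method, op.path);
// GET /suite/{code}/{id}
// DELETE /suite/{code}/{id}
// GET /plan/{code}
// POST /plan/{code}
```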

Generating Postman JSON

Postman makes API testing easy, but its tests live in collections stored in Postman's own JSON format.

We use Portman, which converts an OpenAPI spec into a Postman collection in JSON format:

```shell
portman --url https://raw.githubusercontent.com/qase-tms/specs/master/api.yaml -t true -o ./specs/api.json
```

The resulting JSON contains syntactically correct mock data. However, that data is not guaranteed to be semantically correct: for example, the IDs it uses may not exist on the server.

Filling the JSON collection with proper data

To make sure the data in our JSON collection matches what the server expects, we need to:

- replace the Postman mock data with semantically correct data

- reorder fields to match what the server expects

The following snippet shows what we do:

```javascript
// jsonContent is the parsed Portman output; item[5] holds the plan requests.
// Point the plan requests at the project and plan stored in variables.
jsonContent.item[5].item[0].request.url.path = ['plan', '{{code_project}}'];
jsonContent.item[5].item[1].request.url.path = ['plan', '{{code_project}}'];
jsonContent.item[5].item[2].request.url.path = ['plan', '{{code_project}}', '{{id_plan}}'];
jsonContent.item[5].item[3].request.url.path = ['plan', '{{code_project}}', '{{id_plan}}'];
jsonContent.item[5].item[4].request.url.path = ['plan', '{{code_project}}', '{{id_plan}}'];

// Replace mock request bodies with semantically valid ones.
// The Postman collection format stores a raw body as a string, hence JSON.stringify.
jsonContent.item[5].item[1].request.body.raw = JSON.stringify({
  title: 'Automation Test Plan',
  description: 'description',
  cases: ['{{id_case}}']
});

jsonContent.item[5].item[4].request.body.raw = JSON.stringify({
  title: 'Automation Test Plan patched',
  cases: ['{{id_case}}']
});

// Prepend shared checks (baseTest, defined elsewhere) to the generated test script.
jsonContent.item[5].item[0].event[0].script.exec = [
  baseTest,
  ...jsonContent.item[5].item[0].event[0].script.exec
];

// After creating a plan, save its ID so later requests can use {{id_plan}}.
jsonContent.item[5].item[1].event[0].script.exec = [
  baseTest,
  'pm.test(\'Write `id` plan to env\', function () {',
  '  var responseJson = JSON.parse(responseBody)',
  '  var temp_plan = responseJson.result.id',
  '  pm.environment.set(\'id_plan\', temp_plan)',
  '})',
  ...jsonContent.item[5].item[1].event[0].script.exec
];
```
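The snippet above addresses requests by position (item[5].item[1]), so a change in the order of the generated folders or requests would silently patch the wrong request. A hypothetical alternative is to look requests up by name: every entry in a Postman collection (v2.1 format) has a name, and folders nest their children in an item array. The findRequest helper and the folder and request names below are placeholders, not the names Portman generates for our spec.

```javascript
// Hypothetical helper: find a request by the names along its folder path,
// e.g. ["Plans", "Create a new plan"]. Returns undefined if any name is not found.
function findRequest(collection, names) {
  let node = collection;
  for (const name of names) {
    node = (node.item ?? []).find((child) => child.name === name);
    if (!node) return undefined;
  }
  return node;
}

// A trimmed, hypothetical collection using the Postman folder/item nesting.
const collection = {
  info: { name: "Example collection" },
  item: [
    {
      name: "Plans",
      item: [
        { name: "Get all plans", request: { method: "GET", url: { path: ["plan", ":code"] } } },
        {
          name: "Create a new plan",
          request: { method: "POST", url: { path: ["plan", ":code"] }, body: { mode: "raw", raw: "" } },
        },
      ],
    },
  ],
};

// Patch the request by name instead of by index.
const createPlan = findRequest(collection, ["Plans", "Create a new plan"]);
createPlan.request.url.path = ["plan", "{{code_project}}"];
createPlan.request.body.raw = JSON.stringify({ title: "Automation Test Plan", cases: ["{{id_case}}"] });
console.log(createPlan.request.url.path.join("/")); // plan/{{code_project}}
```

If a name changes, the lookup returns undefined and the patch script fails immediately instead of quietly modifying a different request.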

We also add functions that tear down the data the tests create and keep the tests semantically consistent.
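One possible way to implement teardown, sketched here as an assumption rather than our exact code, is to append a request that deletes what the run created. The sketch reuses the {{code_project}} and {{id_plan}} variables from the snippet above; the {{baseUrl}} variable, the item name, and the expectation that a successful delete returns status 200 are assumptions.

```javascript
// Hypothetical teardown item in Postman collection (v2.1) format that deletes
// the plan created earlier in the run. {{baseUrl}} is an assumed collection variable.
function teardownPlanItem() {
  return {
    name: "Teardown: delete test plan",
    request: {
      method: "DELETE",
      header: [{ key: "accept", value: "application/json" }],
      url: {
        raw: "{{baseUrl}}/plan/{{code_project}}/{{id_plan}}",
        host: ["{{baseUrl}}"],
        path: ["plan", "{{code_project}}", "{{id_plan}}"],
      },
    },
    event: [
      {
        listen: "test",
        script: {
          type: "text/javascript",
          // Assumes the API answers a successful delete with 200.
          exec: [
            "pm.test('Teardown succeeded', function () {",
            "  pm.response.to.have.status(200);",
            "});",
          ],
        },
      },
    ],
  };
}

// Append the teardown at the end of a folder so it runs after the other plan requests.
function appendTeardown(folder) {
  folder.item.push(teardownPlanItem());
  return folder;
}

const plansFolder = { name: "Plans", item: [{ name: "Create a new plan" }] };
appendTeardown(plansFolder);
console.log(plansFolder.item.map((i) => i.name).join(" -> ")); // Create a new plan -> Teardown: delete test plan
```

Newman runs the requests of a folder in collection order, so placing the teardown last keeps the created plan available to every earlier request.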

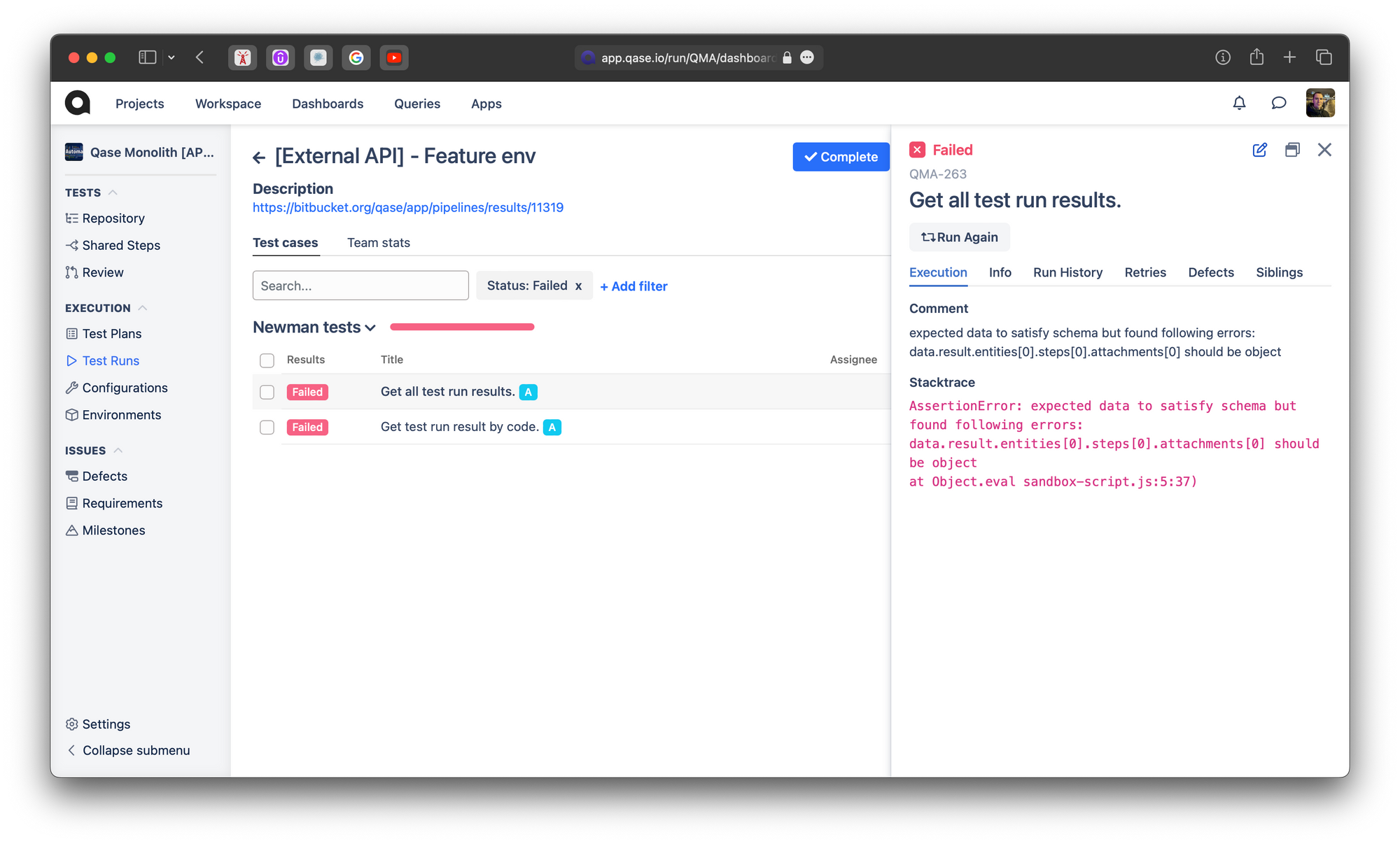

Running the resulting tests in our CI pipeline

We then run the resulting tests in our CI pipeline with Newman:

```shell
newman run ./specs/api.json -e external_api/env/stage_environment.json -r qase --reporter-qase-logging \
  --reporter-qase-runName '[External API] - Stage env' --reporter-qase-runDescription 'Common API smoke Test Run' \
  --reporter-qase-rootSuiteTitle 'Newman tests'
```

The approach we chose reduces the impact of the human factor. Whenever the server changes, our automated contract tests run and validate the spec against the server. If anything breaks, we get feedback almost instantly.

We made test failures block the CI pipeline to make sure we don't push malfunctioning code or an incorrect spec to production.
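The Newman CLI already exits with a non-zero status when a test fails (unless --suppress-exit-code is passed), which is what lets a CI job fail on a broken contract. If the run were driven from a Node script through Newman's library API instead, the script would have to set the exit code itself. A minimal sketch of that decision, assuming the (err, summary) arguments that newman.run() passes to its callback; the exitCodeFor helper is hypothetical.

```javascript
// Hypothetical helper: decide the CI exit code from newman.run()'s callback arguments.
// Any run error or failed assertion fails the job, similar to the Newman CLI's rule.
function exitCodeFor(err, summary) {
  if (err) return 1; // the collection could not be run at all
  if (summary.run.error) return 1; // the run was aborted part-way
  return summary.run.failures.length > 0 ? 1 : 0;
}

// Usage with the real library (requires `npm install newman`):
//   const newman = require("newman");
//   newman.run(
//     { collection: "./specs/api.json", environment: "external_api/env/stage_environment.json", reporters: "cli" },
//     (err, summary) => { process.exitCode = exitCodeFor(err, summary); }
//   );

console.log(exitCodeFor(null, { run: { failures: [] } })); // 0
console.log(exitCodeFor(null, { run: { failures: [{ error: { message: "expected 200" } }] } })); // 1
```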

This approach also saves us significant time on manual spec testing.

Summary

In conclusion, API testing is a crucial aspect of software development, as it helps to ensure the correct functionality, reliability, and security of the API. By performing thorough API testing, developers can catch any issues early in the development process.