Automation should never be done just for the sake of it. In engineering, every decision should be guided by value, and test automation is no exception. Before automating, we need to determine whether it brings measurable benefits — and at least estimate this value upfront. To do that, we’ll go through a series of key questions that will help us make an informed decision.

How do you determine if you should automate tests? How do you calculate their usefulness?

To determine if you need automated tests, consider the following factors:

- Complexity: If your application has multiple interdependent components, a large number of user interactions, or intricate business logic, manual testing can become inefficient and error-prone. For example, applications with dynamic workflows (e.g., finance or healthcare systems), complex data processing (e.g., machine learning pipelines), or extensive integrations (e.g., multi-service SaaS platforms) are prone to regressions that are difficult to catch manually. Automated tests can help ensure consistent validation of these critical areas.

- Frequency of changes: If your software evolves rapidly with frequent updates, ensuring quality becomes increasingly difficult with manual regression testing alone. Every new feature or fix expands the scope of tests needed to maintain stability. Maintaining automated tests does require effort, but the alternative, a continuously growing manual test suite, quickly becomes impractical: it delays releases and undermines the goal of shipping frequently. Automation keeps frequent changes possible without compromising quality.

- Time and resource constraints: Automated tests execute much faster than manual tests, enabling fast feedback and reducing delays in development. Automation is a continuous investment to keep testing time low — if the cost of delays caused by slow manual testing outweighs the effort needed to write and maintain automated tests, then automation becomes a necessary solution.

- Project duration: If your project has a strictly limited lifetime, such as a marketing landing page or a one-off event platform, investing in automated tests may not be worthwhile, since the system won’t be maintained long enough for the investment to pay off. However, if there’s any chance that the project will evolve beyond its initial scope, like an MVP that could turn into a long-term product, skipping automation entirely may lead to costly testing debt later. In such cases, consider a minimal, strategic level of automation to cover critical functionality without over-investing.

- Cost: Automated tests require ongoing investment in development and maintenance, but their value depends on how they reduce testing effort, speed up releases, and prevent costly defects. The key is to weigh these benefits against the cost of implementation.

To calculate the usefulness of implementing automated tests, consider the following:

- Saved time: calculate the time that automation will save compared to manual execution. For example, work out how many hours you spend on regression testing each month, estimate how long you plan to keep developing the product, and estimate how long it will take to automate the tested functionality. Then compare the hours saved over that period with the effort needed to build and maintain the tests.

- Importance of fast releases: evaluate how important it is for you to get testing results quickly. If this is important for you, calculate how much time is spent on manual regression testing in the context of the application release cycle. Automated testing will allow for much more frequent and faster releases.

- Money savings: calculate the savings achieved by reducing manual testing effort and by catching errors before release. I can't suggest a precise formula, since this point is quite individual and depends on your circumstances. But I would suggest considering how much an error that reaches production costs you in reputation, and how much slower, less frequent releases cost you in missed profit.
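
The saved-time comparison above can be sketched as a small calculation. This is only an illustration: the field names and all the numbers are assumptions I made up for the example, and a real estimate would use your own figures.

```typescript
// All figures below are illustrative assumptions, not measurements.
interface AutomationEstimate {
  manualHoursPerMonth: number;      // time spent on manual regression each month
  buildHours: number;               // one-off effort to automate those checks
  maintenanceHoursPerMonth: number; // ongoing upkeep of the automated suite
  remainingMonths: number;          // how long the product will stay in development
}

// Hours saved each month once the automated suite exists.
function monthlySavings(e: AutomationEstimate): number {
  return e.manualHoursPerMonth - e.maintenanceHoursPerMonth;
}

// Months until the initial effort is repaid; Infinity if it never is.
function breakEvenMonths(e: AutomationEstimate): number {
  const saved = monthlySavings(e);
  return saved > 0 ? e.buildHours / saved : Infinity;
}

// Net hours saved over the remaining life of the project (negative means a loss).
function netHoursSaved(e: AutomationEstimate): number {
  return monthlySavings(e) * e.remainingMonths - e.buildHours;
}

const estimate: AutomationEstimate = {
  manualHoursPerMonth: 40,
  buildHours: 120,
  maintenanceHoursPerMonth: 10,
  remainingMonths: 18,
};

console.log(breakEvenMonths(estimate)); // 4
console.log(netHoursSaved(estimate));   // 420
```

With these numbers the suite pays for itself after four months. For a project that ends in three months, the same calculation would argue against automating.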

How to choose tools and programming languages

Choosing the right tools and programming language for automated testing largely depends on your situation, needs and requirements. I can give you some general recommendations that will help you make the right choice:

- Pay attention to the initial conditions. If you already have a product in some form and it's written in JavaScript and PHP, don't bring Java into the project just for autotests. Staying within the existing stack saves money on setting up and maintaining infrastructure, and it lets you share test-writing expertise with the development team. If you have chosen JavaScript for autotests, the choice of framework also becomes simpler, because not all frameworks support this language.

- Evaluate the capabilities of the tool. Consider the capabilities of the tools you are evaluating, such as support for web, mobile, or desktop applications, integration with your testing management and CI/CD systems, ease of use, and community support. If you have specific conditions, start by choosing a tool that will satisfy them, and then select the programming language based on the chosen tool.

- You can also pay attention to the cost of the tool, and evaluate whether it fits within your budget. But I would recommend using open source solutions and not burdening yourself with additional problems.

Why are architecture and design patterns important in software testing?

Automated tests are code, and like any codebase, they accumulate technical debt if not structured and maintained properly. Poorly designed test suites can slow down development, increase maintenance effort, and make it harder to introduce new features. Ward Cunningham introduced the concept of Technical Debt, describing how deficiencies in internal quality make future modifications harder, much like financial debt accumulates interest over time. This idea applies equally to automated tests — short-term workarounds or unstructured test suites can lead to long-term inefficiencies. To understand the broader implications of technical debt, check out Martin Fowler’s article on Technical Debt.

A well-structured test suite isn’t just about writing tests — it’s about ensuring they remain effective, scalable, and maintainable as your application evolves. Applying good architecture and design patterns in test automation brings several key benefits:

- Maintainability: Poorly structured test suites quickly become fragile and difficult to update, leading to increased maintenance costs and inefficiencies. A well-architected automation framework ensures that when changes occur — whether in the application or testing requirements — modifications can be made with minimal effort. This prevents test suites from becoming liabilities rather than assets.

- Reusability: Well-designed tests are modular and allow for reusing test components across different areas of the application. Instead of duplicating test logic, a structured approach — such as Page Object Models, API test libraries, or shared test utilities — ensures that core functionality is tested efficiently and consistently.

- Scalability: As an application grows, its test suite needs to scale alongside it. Good test architecture ensures that adding new tests doesn’t introduce bottlenecks or excessive maintenance overhead. Patterns such as test data management strategies, layered test frameworks, and parallel execution techniques help automated tests remain performant and adaptable as complexity increases.

- Consistency: A structured test suite improves readability, execution reliability, and team collaboration. When tests follow consistent patterns, whether in naming conventions, structure, or setup/teardown procedures, it reduces confusion and helps teams onboard new members faster. This consistency also minimizes the risk of flaky or redundant tests, ensuring that automation remains a reliable tool.

By investing time and effort into creating well-designed tests, you can save time and resources in the long run while also improving the overall quality of your software.

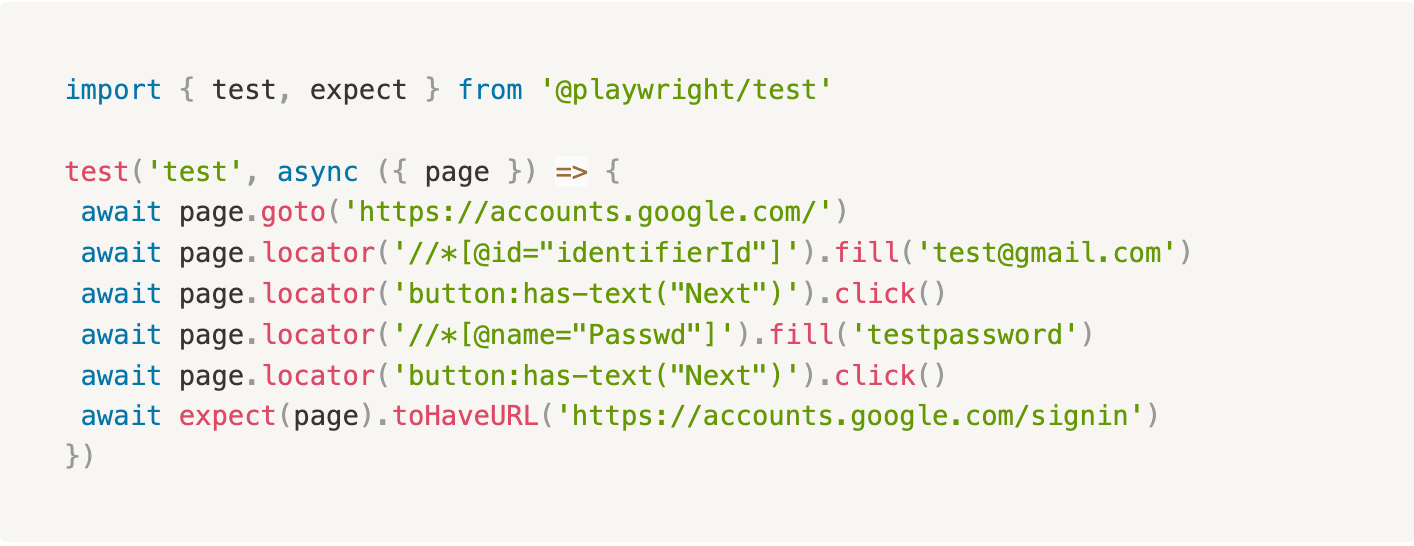

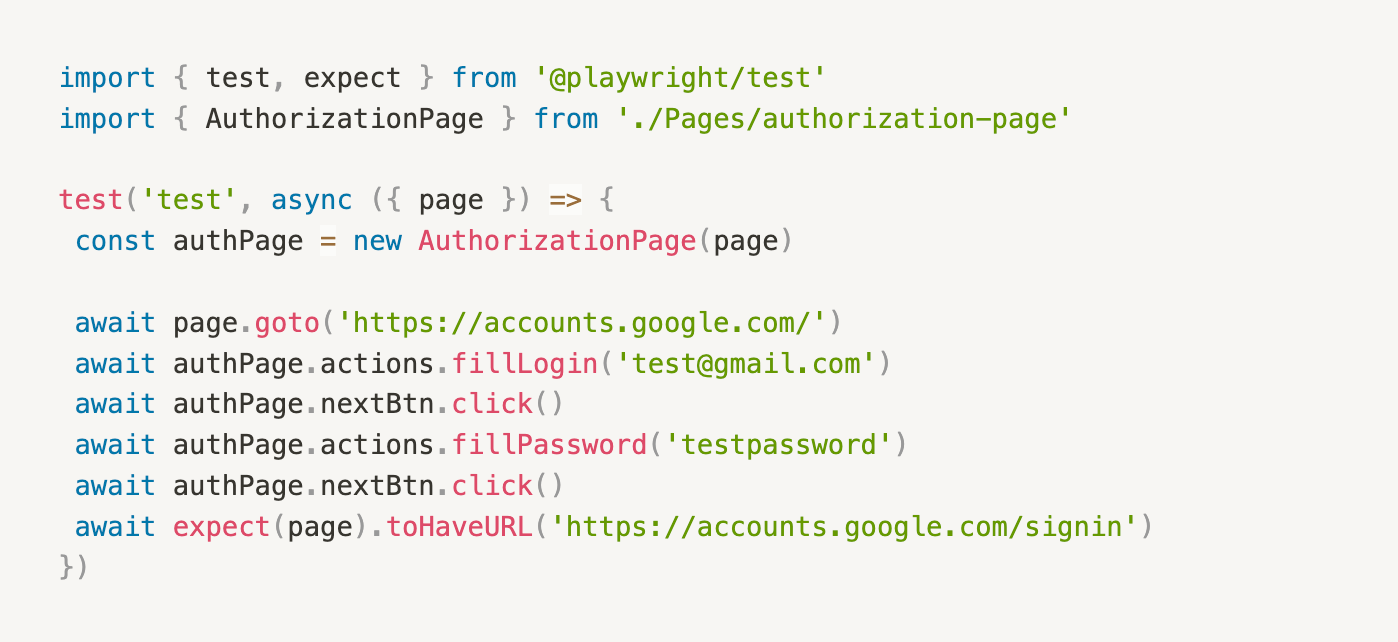

Let's stop here and go into more detail. Let's say we are beginner automation QA (AQA) engineers writing our first code. Let it be automation of logging into Google using Playwright.

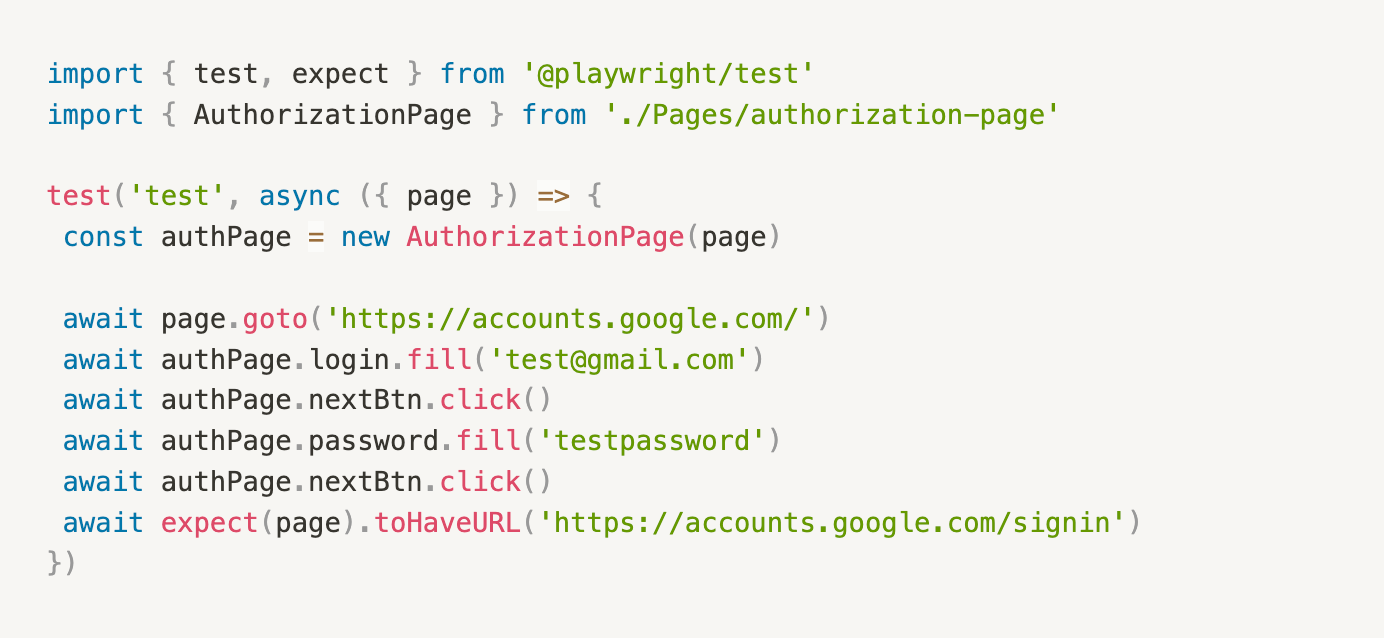

This is a small script that's pretty easy to maintain. But if we continue to write automated tests for the login form, there will be more tests, and some of them will use the same fields. This means that a lot of time will be spent maintaining such tests in the event of even minor changes to the form layout. How can we avoid this? By using the Page Object Model (POM) pattern and creating a separate class that contains all the locators for the login page. This way, when the layout changes, it will not be necessary to change a dozen tests; it will be enough to change the locator in one place.

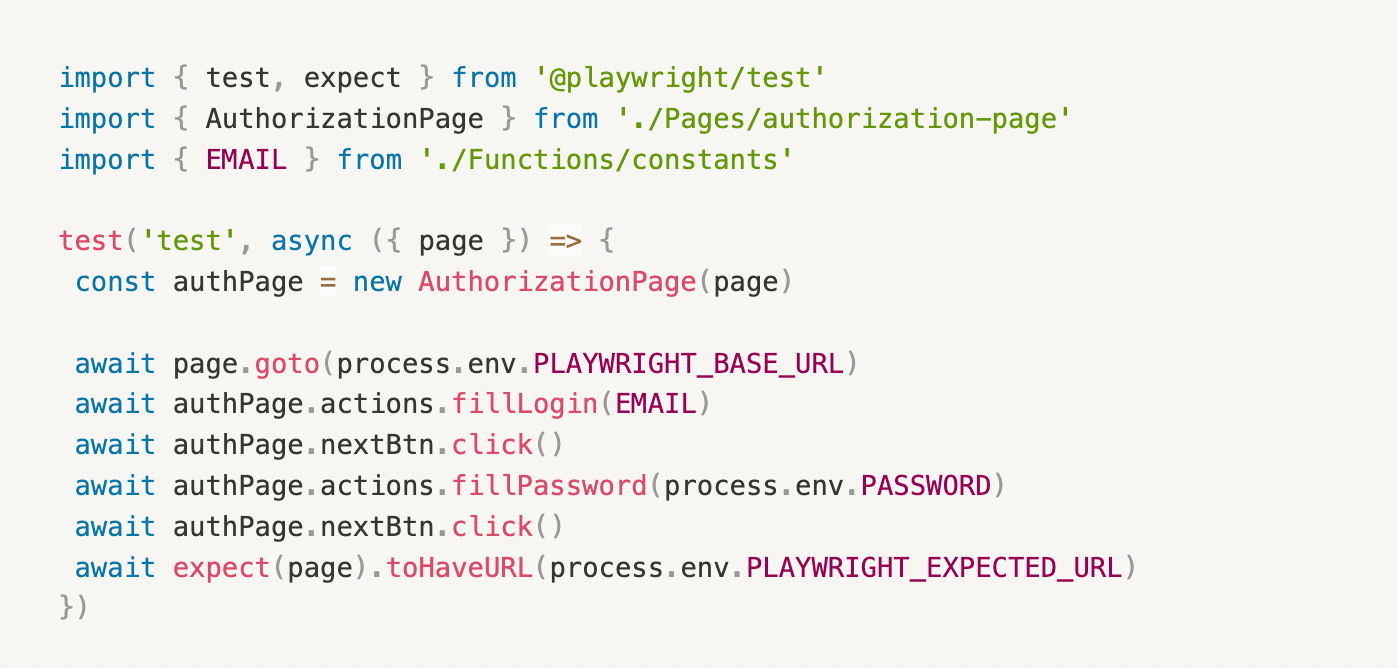

But we have another problem - not only can the layout change, but the way we interact with elements can also change. In such cases, we use actions. Let's take the fill function as an example, although actions are more often used to describe more complex things. For example, when several clicks are needed to perform an action.
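
Here is a minimal sketch of both ideas together: a page object that owns the locators, and an action that hides how the form is driven. To keep it runnable without a browser, it is typed against a small `PageLike` interface of my own rather than importing Playwright; Playwright's `Page` offers `fill` and `click` methods with compatible signatures. The selector values and the `RecordingPage` fake are hypothetical.

```typescript
// Minimal subset of the page API used here (an assumption for this sketch).
interface PageLike {
  fill(selector: string, value: string): Promise<void>;
  click(selector: string): Promise<void>;
}

// Page object: the only place that knows the login form's selectors.
// The selector values are hypothetical placeholders.
class LoginPage {
  static readonly emailInput = 'input[type="email"]';
  static readonly passwordInput = 'input[type="password"]';
  static readonly submitButton = 'button[type="submit"]';

  constructor(private readonly page: PageLike) {}

  // Action: one named step that hides how the form is actually driven.
  async login(email: string, password: string): Promise<void> {
    await this.page.fill(LoginPage.emailInput, email);
    await this.page.fill(LoginPage.passwordInput, password);
    await this.page.click(LoginPage.submitButton);
  }
}

// Fake page that records calls, so the sketch can run without a browser.
class RecordingPage implements PageLike {
  readonly calls: string[] = [];

  async fill(selector: string, value: string): Promise<void> {
    this.calls.push(`fill ${selector}=${value}`);
  }

  async click(selector: string): Promise<void> {
    this.calls.push(`click ${selector}`);
  }
}
```

A test would then call `await new LoginPage(page).login(email, password)` and never mention a selector. If the form gains an extra click between the two fields, only `login` changes.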

What if the URL of the login page changes? Or what if we want to change the test user's credentials? Not to mention that storing credentials in the code is not a good idea. Here, constants and environment variables come to the rescue: values such as paths live in constants defined in one place, while credentials come from environment variables, which we do not store in the code.
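
As a rough sketch of that split, the function below reads credentials from an environment map and fails fast when one is missing. The variable names (`BASE_URL`, `TEST_USER_EMAIL`, `TEST_USER_PASSWORD`) and the `/login` path are assumptions for the example. The environment is passed in as a parameter so the function is easy to test.

```typescript
interface TestConfig {
  baseUrl: string;
  loginPath: string;
  email: string;
  password: string;
}

// Constant: not a secret, so it is fine to keep it in the repository.
const LOGIN_PATH = "/login";

// Builds the test configuration from environment variables.
// Throws early with a clear message instead of failing mid-test.
function loadTestConfig(env: Record<string, string | undefined>): TestConfig {
  const required = (name: string): string => {
    const value = env[name];
    if (!value) {
      throw new Error(`Missing environment variable: ${name}`);
    }
    return value;
  };

  return {
    baseUrl: required("BASE_URL"),
    loginPath: LOGIN_PATH,
    email: required("TEST_USER_EMAIL"),
    password: required("TEST_USER_PASSWORD"),
  };
}
```

In a Node-based runner you would typically call `loadTestConfig(process.env)` once and share the result across tests.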

That is why architecture and design patterns for automated testing are crucial. They help to eliminate many problems before they even arise and become a concern.

Why parallelization matters in test automation

One of the key advantages of automated testing is speed, and parallel execution is essential for achieving efficient test runs. Without parallelization, test suites take longer to complete, delaying feedback and slowing down development cycles. However, improper implementation can lead to flaky tests, race conditions, and unreliable results, negating the benefits of automation.

To ensure reliable parallel execution, consider the following:

- Thread safety. Tests running in parallel should not interfere with each other. Shared resources, such as databases, files, or global states, can lead to inconsistent results if not properly isolated.

- Data independence. Ensure that test data is unique per execution thread, avoiding conflicts in stateful environments. Techniques like isolated test environments, session management, and containerization can help.

- Framework-specific parallelization. Many modern test frameworks, such as Playwright, Cypress, and TestNG, offer built-in parallel execution support. For example, Playwright runs test files in parallel by default across several worker processes, and its `fullyParallel` option extends this to tests within a single file. Using these features while designing tests for concurrency improves reliability and execution speed.

When implemented properly, parallelization reduces execution time, enabling faster feedback loops and more efficient CI/CD pipelines.
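
To illustrate data independence, here is a small sketch of a factory that produces email addresses unique per run, per worker, and per call. The function name and address format are my own assumptions. In Playwright, the worker number could come from `testInfo.workerIndex`.

```typescript
// Returns a generator of email addresses that cannot collide across workers:
// the run id separates pipeline runs, the worker index separates parallel
// workers, and the counter separates calls within one worker.
function makeUniqueEmailFactory(runId: string, workerIndex: number): () => string {
  let counter = 0;
  return () => `qa+${runId}-w${workerIndex}-${++counter}@example.test`;
}

const worker0 = makeUniqueEmailFactory("run42", 0);
const worker1 = makeUniqueEmailFactory("run42", 1);

console.log(worker0()); // qa+run42-w0-1@example.test
console.log(worker1()); // qa+run42-w1-1@example.test
```

Because no two workers can produce the same address, tests that register users can run side by side without racing for the same account.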

What additional testing tools do we need and how can they help?

Automated testing often requires additional tools to simulate real-world scenarios, handle dependencies, and improve test efficiency. Some tests, for example, integration testing, cannot be fully executed without additional tools. Below are some examples where specialized tools enhance automated testing.

Payment Systems

Testing payment flows is essential in many applications, but using real payment methods is impractical and risky. Instead, test cards provided by payment gateway providers (e.g., PayPal, Stripe, Adyen) allow for safe, automated verification of different payment scenarios, such as valid and invalid card numbers, expired cards and insufficient balance, or declined transactions and fraud prevention flows. By using these test cards, you can validate payment logic without real financial transactions, ensuring that various cases — both successful and failed payments — are handled correctly.
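
One way to organize such checks is a table of scenarios, sketched below. The card numbers are, as far as I know, the ones Stripe documents for its test mode, so treat them as assumptions and confirm them against your provider's current list. The `Pay` type is a hypothetical stand-in for your application's checkout call, kept synchronous here for brevity.

```typescript
type PaymentOutcome = "succeeded" | "declined" | "insufficient_funds";

interface CardScenario {
  name: string;
  cardNumber: string;
  expected: PaymentOutcome;
}

// Assumed test card numbers; verify against the provider's documentation.
const scenarios: CardScenario[] = [
  { name: "valid card", cardNumber: "4242424242424242", expected: "succeeded" },
  { name: "generic decline", cardNumber: "4000000000000002", expected: "declined" },
  { name: "insufficient funds", cardNumber: "4000000000009995", expected: "insufficient_funds" },
];

// Hypothetical stand-in for the application's checkout call.
type Pay = (cardNumber: string) => PaymentOutcome;

// Runs every scenario and returns the names of those that did not match.
function failedScenarios(pay: Pay, cases: CardScenario[]): string[] {
  return cases
    .filter((c) => pay(c.cardNumber) !== c.expected)
    .map((c) => c.name);
}

// A deliberately broken implementation that approves everything.
const alwaysSucceeds: Pay = () => "succeeded";

console.log(failedScenarios(alwaysSucceeds, scenarios));
// [ 'generic decline', 'insufficient funds' ]
```

Adding a new case, such as an expired card, is then a one-line change to the table rather than a new test.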

Email Testing (Mail Servers)

Many applications rely on email-based flows (e.g., user registration, password recovery, notifications). Instead of manually checking emails, you can automate email interactions in two primary ways:

- Using a real email provider (manual or UI-driven testing):

  - Create test accounts with email providers (e.g., Gmail, Outlook).

  - Automate interactions by parsing emails via locators in the web UI.

  - Extract verification links and trigger test actions.

- Using a dedicated test mail server (API-driven testing):

  - Deploy an SMTP testing tool such as MailHog or MailCatcher.

  - Redirect test environment emails to the test mail server.

  - Retrieve email contents via API, extracting necessary data in the code.

The second approach is usually better because it reduces dependency on third-party email providers, making tests more stable and less prone to failures caused by external services. It also speeds up execution since tests can retrieve email contents via an API instead of relying on slow and error-prone UI interactions. Additionally, this method scales better, as it eliminates the need for multiple real email accounts, simplifying test setup and maintenance.
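
The "extracting necessary data in the code" step might look like the sketch below. It assumes the email body has already been fetched from the test mail server's API and decoded into a plain string. The function name and the `/verify` path are hypothetical.

```typescript
// Finds the first URL in an email body that contains the given path fragment.
// Works for plain-text bodies and for HTML bodies where the URL sits in an href.
function extractLink(body: string, pathFragment: string): string | null {
  const urls: string[] = body.match(/https?:\/\/[^\s"'<>]+/g) ?? [];
  return urls.find((url) => url.includes(pathFragment)) ?? null;
}

const emailBody = [
  "Hi there,",
  "Please confirm your address:",
  "https://app.example.test/verify?token=abc123",
  "Help: https://app.example.test/help",
].join("\n");

console.log(extractLink(emailBody, "/verify"));
// https://app.example.test/verify?token=abc123
```

The test can then open the returned link directly, without ever logging into a mailbox UI.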

How to handle data cleansing in automated testing

Data cleansing is very important for test reliability and independence. Without proper data management, tests can become interdependent, leading to flaky results, inconsistent failures, and debugging difficulties. Cleansing test data ensures that each test runs in a predictable state, preventing unexpected interference between test runs.

There are several approaches to managing test data:

- Cleaning up after each test – Tests can delete or reset created entities via API calls, database scripts, or transaction rollbacks. This ensures that subsequent test runs start from a fresh, known-good dataset.

- Using isolated test data – Instead of cleaning up after tests, each test can use unique, non-overlapping data (e.g., generating test-specific user accounts or IDs). While this avoids interference, it requires careful data design and can be complex for large test suites.

- Database snapshots and rollbacks – Some teams use database snapshots or sandbox environments to revert test data between executions, ensuring a clean state without manual deletions.

The best approach depends on test architecture and system complexity. In most cases, automating data resets or cleanup is the most scalable solution.
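
A common way to automate cleanup is to register an undo step whenever a test creates something, then run those steps in reverse order during teardown. The `CleanupStack` class below is a sketch of that idea, not a library API. The in-memory `users` set stands in for real API or database calls.

```typescript
type CleanupStep = () => Promise<void>;

class CleanupStack {
  private readonly steps: CleanupStep[] = [];

  // Register how to undo something right after creating it.
  defer(step: CleanupStep): void {
    this.steps.push(step);
  }

  // Run steps in reverse creation order and keep going if one fails,
  // so a single broken cleanup does not leave everything else behind.
  async runAll(): Promise<Error[]> {
    const errors: Error[] = [];
    const pending = this.steps.splice(0).reverse();
    for (const step of pending) {
      try {
        await step();
      } catch (err) {
        errors.push(err instanceof Error ? err : new Error(String(err)));
      }
    }
    return errors;
  }
}

// In-memory stand-in for the system under test.
const users = new Set<string>();

async function createUser(id: string, cleanup: CleanupStack): Promise<void> {
  users.add(id);
  cleanup.defer(async () => {
    users.delete(id);
  });
}
```

In a test framework, `runAll` would be called from the teardown hook, and any returned errors could be logged or turned into a failure.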

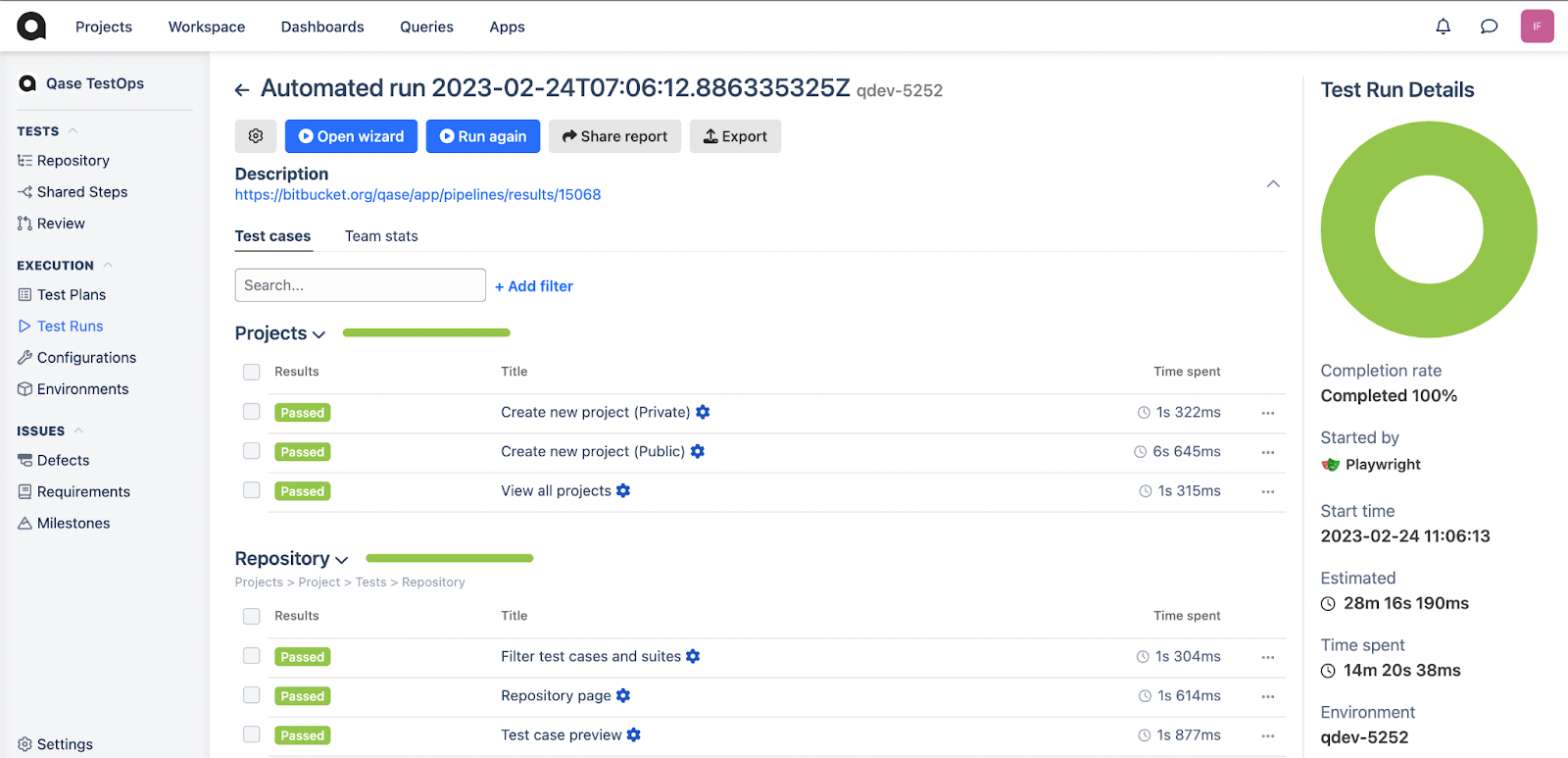

Choosing reporters and a Test Management System (TMS)

Once you’ve set up automated tests, the next challenge is analyzing test results efficiently. Understanding which tests failed, when they failed, how long they took to execute, and overall test trends is essential for improving software quality. This is where reporters and a Test Management System (TMS) come into play.

- Reporters: collect and format test execution data, including pass/fail rates, execution time, and error messages. Many testing frameworks include built-in reporters, while others allow integration with external tools.

- Test Management Systems (TMS): centralize test data, helping teams track test coverage, analyze trends, and integrate results into broader QA workflows. A TMS also enables collaboration between testers, developers, and product teams.
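
To make the reporter's job concrete, here is a minimal sketch of summarizing raw results into the numbers mentioned above. The `TestResult` shape is an assumption for the example and not the format of any particular framework or TMS.

```typescript
type Status = "passed" | "failed" | "skipped";

interface TestResult {
  name: string;
  status: Status;
  durationMs: number;
  error?: string;
}

interface RunSummary {
  total: number;
  passed: number;
  failed: number;
  skipped: number;
  passRate: number; // share of executed (non-skipped) tests that passed
  totalDurationMs: number;
  failures: string[];
}

function summarize(results: TestResult[]): RunSummary {
  const count = (status: Status): number =>
    results.filter((r) => r.status === status).length;
  const passed = count("passed");
  const failed = count("failed");
  const executed = passed + failed;

  return {
    total: results.length,
    passed,
    failed,
    skipped: count("skipped"),
    passRate: executed === 0 ? 0 : passed / executed,
    totalDurationMs: results.reduce((sum, r) => sum + r.durationMs, 0),
    failures: results
      .filter((r) => r.status === "failed")
      .map((r) => `${r.name}: ${r.error ?? "no message"}`),
  };
}

const sample: TestResult[] = [
  { name: "login works", status: "passed", durationMs: 1200 },
  { name: "logout works", status: "passed", durationMs: 800 },
  { name: "reset password", status: "failed", durationMs: 2500, error: "timeout" },
  { name: "sso login", status: "skipped", durationMs: 0 },
  { name: "signup", status: "passed", durationMs: 1500 },
];

console.log(summarize(sample).passRate); // 0.75
```

A TMS adds history on top of this: the same summary stored per run is what makes trends and flaky tests visible.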

Why Qase?

Qase provides built-in reporters for all the popular testing frameworks and languages, a user-friendly interface, and extensive customization options, making test result analysis efficient and flexible. Its integrations with popular CI/CD pipelines ensure seamless test tracking and reporting.

Conclusion

Automated testing is a powerful tool when implemented correctly. It speeds up testing, improves reliability, and enhances overall software quality. However, to maximize its benefits, teams must make informed decisions at every stage:

- Justify automation – Automation should be driven by value, not done for its own sake. Consider factors like complexity, change frequency, project duration, and cost before investing.

- Follow good test architecture – Applying design patterns and scalable test structures prevents technical debt and ensures maintainability.

- Optimize test execution – Parallelization accelerates test runs but requires proper implementation to avoid flaky results.

- Leverage auxiliary tools – Testing integrations like payments and email flows requires specialized tools to simulate real-world conditions.

- Manage test data carefully – Proper data cleansing and isolation ensure reliable, independent test executions.

- Analyze results effectively – A TMS and structured reporting help track test outcomes, spot trends, and improve efficiency.

Poorly implemented automation can erode trust within teams, making it more of a liability than an asset. But by following best practices, teams can ensure automation remains a long-term enabler of quality rather than a source of frustration.