Test automation strategy design falls under the Quality System umbrella. It is an evolving concept that looks different for each company, depending on factors such as company size, code complexity, and the use of microservices. While designing a test automation strategy should be an iterative process based on company needs, there are ways to build in flexibility for potential future needs while still addressing existing ones.

In my career in Quality, I have supported both large corporate teams and small startup teams, and their needs differ considerably when it comes to designing test automation strategies. While requirements vary from one company and engineering organization to the next, the industry as a whole has seen a rise in microservices, DevOps, and continuous deployment. With this shift, more companies are looking for ways to structure their test automation frameworks to reduce inefficiencies and speed up their deployment cycles.

Adopting a dual-tool approach to unit testing and integration testing can improve efficiency, flexibility, and code clarity for your team. This article will explore the benefits of a dual-tool approach to unit and integration testing through the lens of Mocha and Jest with the assistance of a test management system (TMS).

Getting started with unit tests and Jest

Unit tests are typically the largest set of automated tests in your codebase, and each one focuses on a single, isolated unit of code. Because they are small, they run quickly, giving engineers a fast feedback cycle while writing code, and they can run continuously throughout the deployment pipeline.

Jest is a JavaScript testing framework, widely used for unit tests and originally built on top of Jasmine. It works with projects using TypeScript, Node, Angular, React, and more. One of Jest’s biggest draws is its zero-config setup: it works out of the box and does not rely on third-party integrations for most of its functionality. Jest offers the following features:

- Parallel test execution: optimizes the speed of your test runs

- Snapshot testing: typically used for UI testing; lets you take a “snapshot” of an object and compare against it over time for built-in regression testing. Snapshots are stored in separate `.snap` files (in a `__snapshots__` folder next to the test) or embedded inline in the test file

- Auto-mocking: Jest can automatically replace an imported module with a generated mock (for example, by calling `jest.mock()` with just the module path), which simplifies the test scripting process

- Integrated code coverage reporting: generate code coverage by adding a single flag (`--coverage`) to your CLI command; with the `collectCoverageFrom` option, Jest can also report coverage for files that do not have tests yet
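To make the auto-mocking idea concrete, here is a simplified, framework-free sketch of what an automatic mock does by default: every function on a module is replaced with a stub that records its calls and returns `undefined`, while non-function values are left alone. The `createMockFn` and `autoMock` helpers and the `emailService` module are hypothetical illustrations, not Jest’s implementation or API.

```javascript
// Hypothetical helpers illustrating the idea behind automatic mocking.
// Not Jest's implementation: they only mimic the default behavior of an
// auto-generated mock (record every call, return undefined).

function createMockFn() {
  const calls = [];
  function mockFn(...args) {
    calls.push(args); // remember each call's arguments for later assertions
    return undefined; // auto-generated mocks return undefined by default
  }
  mockFn.calls = calls;
  return mockFn;
}

// Replace every function-valued property of a module object with a mock,
// copying non-function values through unchanged.
function autoMock(moduleObject) {
  const mocked = {};
  for (const [key, value] of Object.entries(moduleObject)) {
    mocked[key] = typeof value === "function" ? createMockFn() : value;
  }
  return mocked;
}

// Hypothetical dependency that would send real emails in production.
const emailService = {
  sender: "noreply@example.com",
  send(to, subject) {
    throw new Error(`would email ${to} about "${subject}" over the network`);
  },
};

// Code under test can now call the mocked module safely.
const mockedEmail = autoMock(emailService);
mockedEmail.send("ada@example.com", "Welcome");
```

In Jest itself, `jest.mock('./emailService')` produces this kind of automatic mock, and the recorded calls are available through each mock function’s `mock.calls` property.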

Beyond the features that Jest offers, it is a widely used framework in the JavaScript ecosystem. There is a large community behind Jest that offers support for challenging scenarios and provides extensive documentation as well as regular updates to the framework.

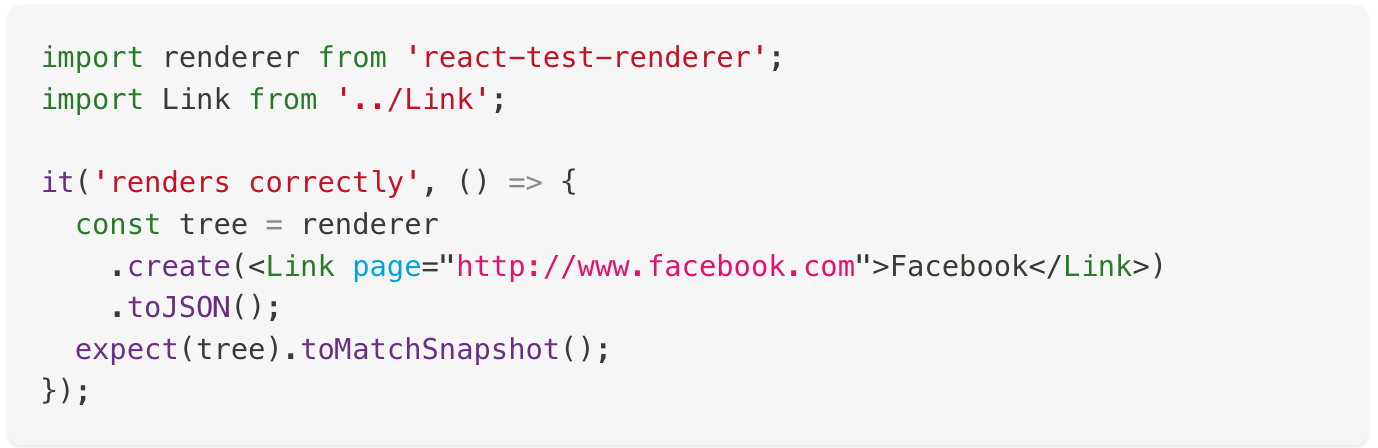

Jest’s documentation explains how to use its features and includes feature-specific guides. For instance, the Snapshot Testing Guide contains this example of a snapshot test, along with additional examples and best practices.

Snapshot testing is one of Jest’s standout features, so let's walk through an example use case. It’s important to remember that the snapshot feature is one of the many assertions that Jest tests can have, and different user scenarios will require different types of assertions to build the most effective test suite for your needs.
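Under the hood, a snapshot assertion amounts to: serialize the output, store it the first time, and compare against the stored copy on every later run. The minimal sketch below shows that mechanism with a hypothetical `matchSnapshot` helper, an in-memory `Map` standing in for Jest’s `.snap` files, `JSON.stringify` standing in for Jest’s own serializer, and plain objects standing in for rendered DOM. It illustrates the idea; it is not Jest’s implementation.

```javascript
// In-memory stand-in for Jest's on-disk .snap files (hypothetical).
const snapshotStore = new Map();

// Jest uses its own serializer; JSON is a simple stand-in here.
function serialize(value) {
  return JSON.stringify(value, null, 2);
}

// First call for a name records the snapshot; later calls compare against it.
function matchSnapshot(name, value) {
  const serialized = serialize(value);
  if (!snapshotStore.has(name)) {
    snapshotStore.set(name, serialized);
    return { pass: true, written: true };
  }
  return { pass: snapshotStore.get(name) === serialized, written: false };
}

// Plain objects standing in for the rendered DOM of a profile card.
const render = (tag, name) => ({ tag, children: [{ tag: "h2", text: name }] });

const results = [
  matchSnapshot("profile card", render("div", "Ada Lovelace")), // first run: written
  matchSnapshot("profile card", render("div", "Ada Lovelace")), // unchanged: passes
  matchSnapshot("profile card", render("section", "Ada Lovelace")), // wrapper changed: fails
];
```

When a change is intentional, Jest lets you accept the new output by re-running with `--updateSnapshot` (or `-u`), which rewrites the stored snapshot.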

Imagine you are building a user profile component and want to make sure its underlying DOM structure does not change unexpectedly in future versions. Because a Jest snapshot captures the rendered DOM structure and flags any difference from the saved version, snapshot testing is a good fit for this scenario.

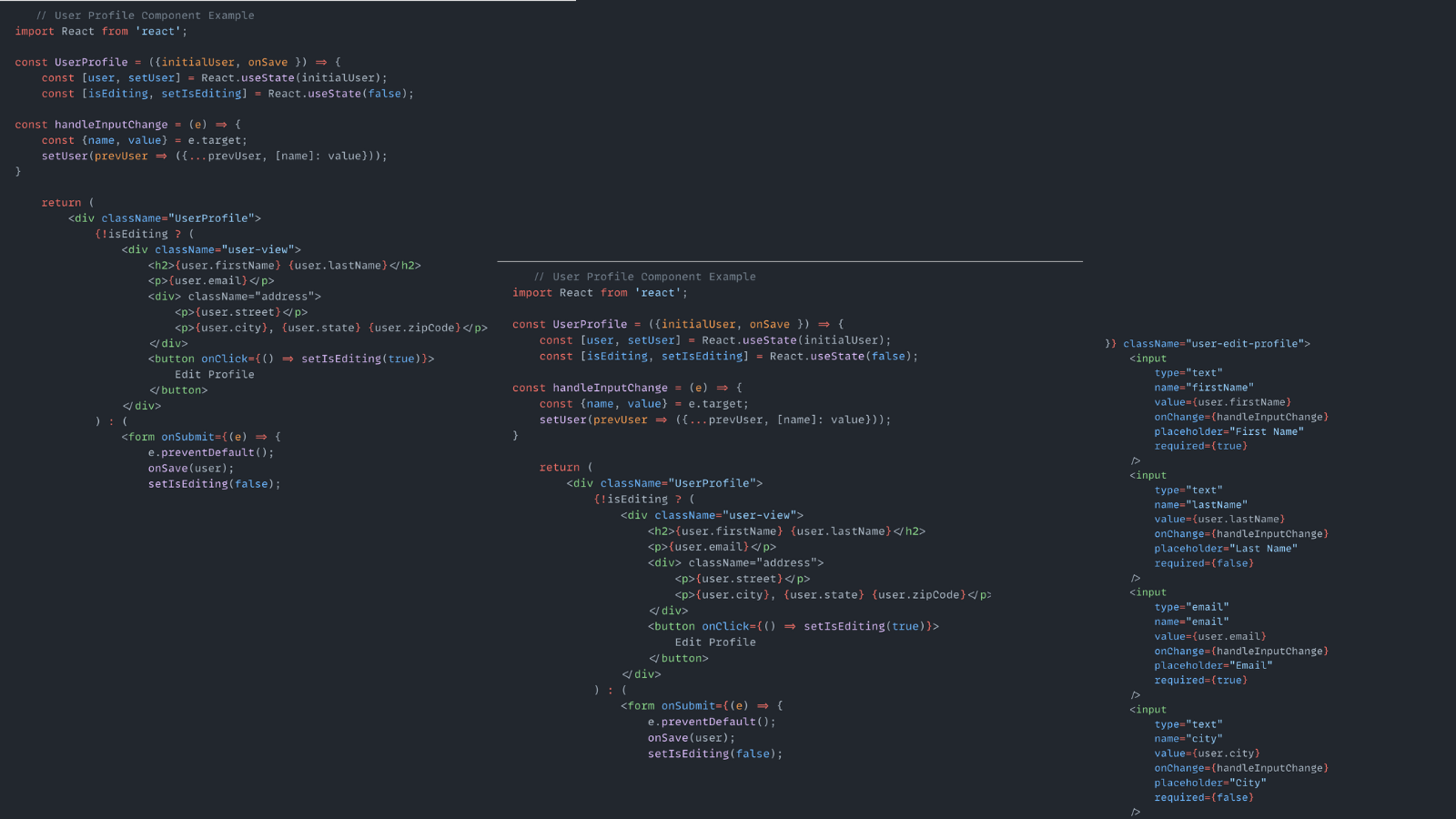

Basic user profile component example:

As you can see, this is a basic user component written in React with minimal state management and fields for first and last name, email, and address. Now, let’s write a couple of snapshot tests to verify that the view and edit modes render correctly.

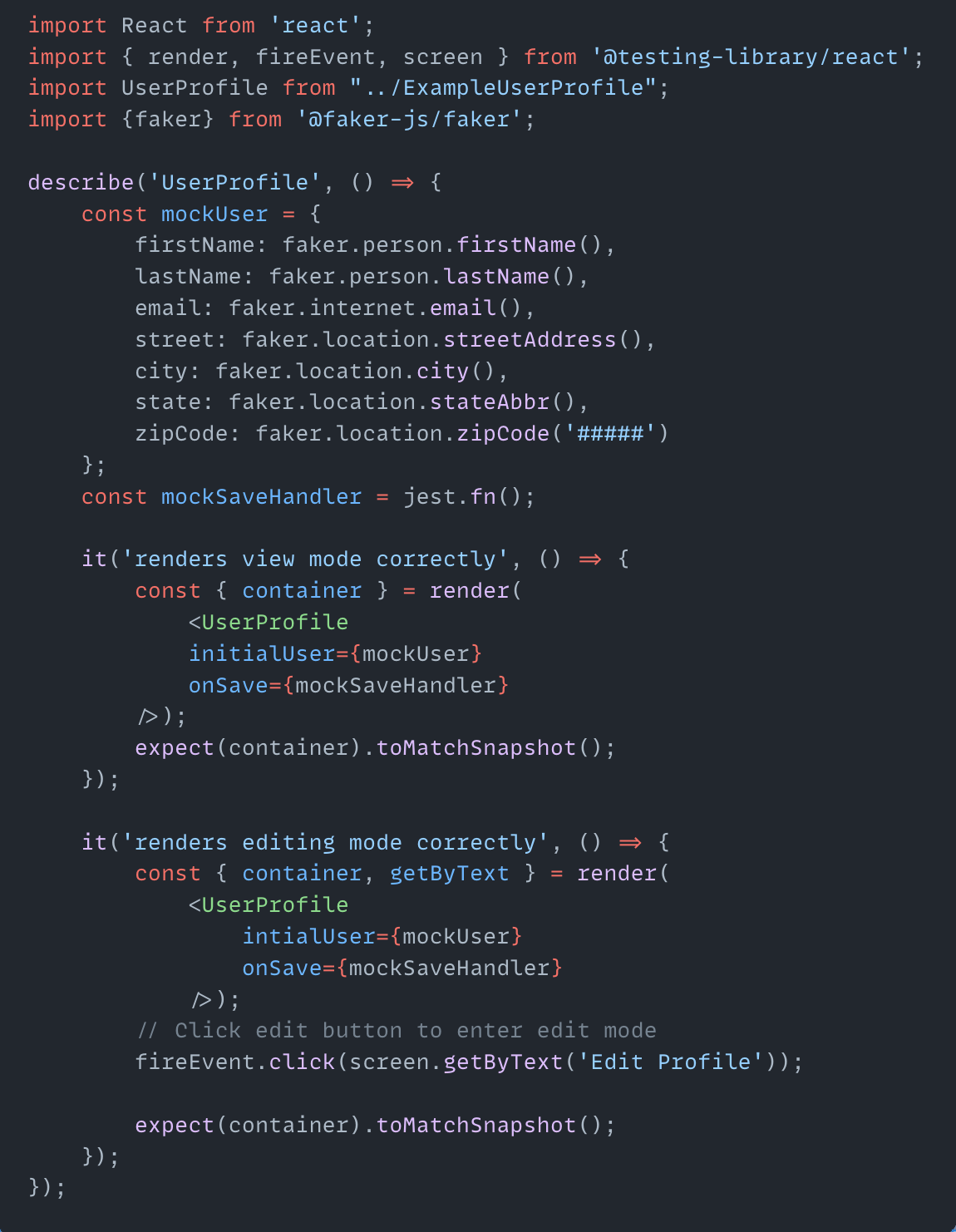

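For our snapshot tests, I used Faker to generate randomized mock data and React Testing Library to render the component. The test setup creates a mock user and a mock save handler. Then, the two test functions compare the output of each test run to the saved snapshot. Because snapshots compare exact output, randomized data needs a fixed seed (for example, via `faker.seed()`) so the same values are generated on every run; otherwise the snapshots will fail even when the component has not changed.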
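A framework-free sketch of that setup is below. A small seeded pseudo-random generator (the widely used mulberry32 algorithm) stands in for Faker, so the same seed always yields the same mock user, and a mock save handler records saves instead of persisting them. `createMockUser`, `createMockSaveHandler`, and the sample value lists are hypothetical; they only mirror the component’s name, email, and address fields.

```javascript
// Seeded PRNG (mulberry32): the same seed produces the same sequence every run.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function random() {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // float in [0, 1)
  };
}

// Hypothetical sample values; Faker would draw from much larger lists.
const FIRST_NAMES = ["Ada", "Grace", "Alan", "Edsger"];
const LAST_NAMES = ["Lovelace", "Hopper", "Turing", "Dijkstra"];
const CITIES = ["London", "New York", "Manchester", "Austin"];

function pick(random, list) {
  return list[Math.floor(random() * list.length)];
}

// Hypothetical mock-user factory: deterministic for a given seed.
function createMockUser(seed) {
  const random = mulberry32(seed);
  const firstName = pick(random, FIRST_NAMES);
  const lastName = pick(random, LAST_NAMES);
  return {
    firstName,
    lastName,
    email: `${firstName}.${lastName}@example.com`.toLowerCase(),
    address: `${1 + Math.floor(random() * 999)} Main St, ${pick(random, CITIES)}`,
  };
}

// Mock save handler: records each saved profile instead of persisting it.
function createMockSaveHandler() {
  const saved = [];
  const onSave = (profile) => {
    saved.push(profile);
  };
  return { onSave, saved };
}

const userA = createMockUser(42);
const userB = createMockUser(42); // same seed, so identical to userA
const { onSave, saved } = createMockSaveHandler();
onSave({ ...userA, firstName: "Updated" });
```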

Moving on to integration tests and Mocha

While unit testing has long been the norm, integration testing is becoming increasingly important in modern software systems. Many software products are now made up of complex systems of interconnected parts, and end-to-end test frameworks are not the most efficient way to test all of these connections.

Mocha is an open-source JavaScript test framework that runs both on Node.js and in the browser. Because Mocha runs on Node.js, you can run tests directly on the server when a validation does not need a browser environment. Mocha has the following features:

- Parallel test execution: optimizes the speed of your test runs

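- Programmatic API: Mocha can be driven from your own scripts (for example, `new Mocha()` with `addFile()` and `run()`), which helps when building custom runners or tooling; mocking external services is typically handled by companion libraries such as Sinon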

- Flexible configuration: you can define how and when tests run, which makes complex scenarios easier to test, and Mocha works well with a wide range of assertion libraries

- Testing asynchronous code: supports async operations through callbacks (`done`), returned promises, and async/await

- Testing lifecycle hooks: provides hooks to enable setup and teardown for complex scenarios
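To show what async support means in practice, here is a minimal sketch of how a Mocha-style runner can treat an async test: it waits for the returned promise, marks the test failed if the promise rejects, and also fails it if it runs past a time limit. The 2000 ms default mirrors Mocha’s default timeout; `runAsyncTest`, `fakeFetchUser`, and the result shape are hypothetical, not Mocha’s internals.

```javascript
const MOCHA_DEFAULT_TIMEOUT_MS = 2000; // Mocha's default per-test timeout

// Hypothetical runner for a single async test function.
function runAsyncTest(testFn, timeoutMs = MOCHA_DEFAULT_TIMEOUT_MS) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`Timeout of ${timeoutMs}ms exceeded`)),
      timeoutMs,
    );
  });
  // Promise.resolve().then(...) also turns synchronous throws into rejections.
  const run = Promise.resolve().then(testFn);
  return Promise.race([run, timeout])
    .then(
      () => ({ status: "passed" }),
      (error) => ({ status: "failed", error: error.message }),
    )
    .finally(() => clearTimeout(timer)); // don't keep the process alive
}

// Example: an async test that awaits a simulated (hypothetical) API call.
const fakeFetchUser = () =>
  new Promise((resolve) => setTimeout(() => resolve({ id: 1 }), 10));

runAsyncTest(async () => {
  const user = await fakeFetchUser();
  if (user.id !== 1) throw new Error("unexpected user");
}).then((result) => console.log(result.status));
```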

Mocha is also a widely used test automation framework with a large community behind it, providing extensive documentation, regular updates, and community support.

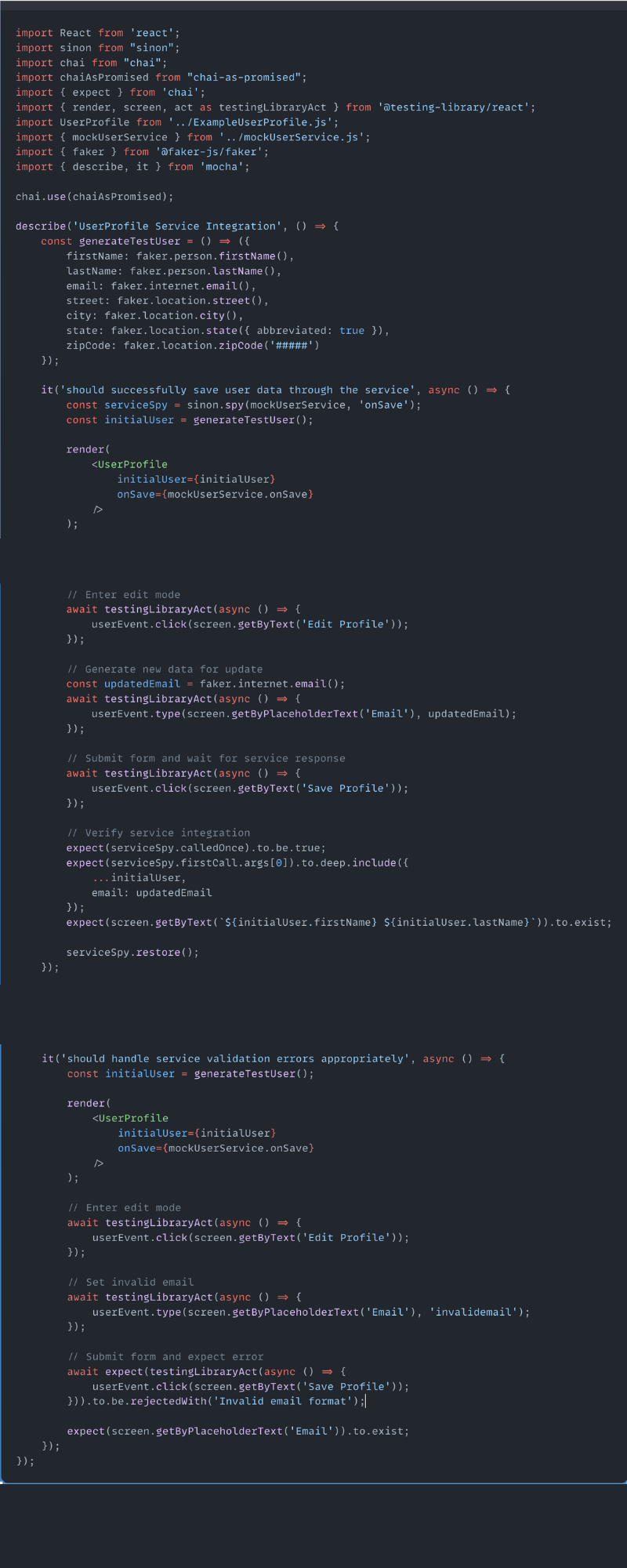

For our integration test example, we will work on the same user profile component I shared above. For this test, we will verify that we can save the user profile changes and handle errors for an invalid email scenario.

Integration tests are a bit more complex to write, and we need to import some additional libraries for the test setup. While Mocha is our main testing framework and test runner, you will see that I also imported several other libraries: Chai as the assertion library, React Testing Library for rendering the React components, Sinon for spying on my mock service, and Faker for generating randomized mock data. I also created a small mock service, since these are example files and are not connected to any database.

As you can see from this example, the file starts with the setup for the different libraries and frameworks. In Mocha, a “describe” block groups related tests and sets up the structure they share. Each “it” block nested inside the “describe” block defines a single test: the action to perform and the expected result.

From our example, you can see the “describe” function setting up the test data generation through one of the libraries we imported – Faker. Then, two “it” blocks are nested under the “describe” function. The first is verifying that updated user data is saved through the mock service, and the second is verifying that it can handle validation errors as expected. In our example, we have a single validation error – invalid email – which gives our test file both a “positive” test (that user data saved correctly) and a “negative” test (that a user can fail to save if there are input errors).

Dual-tool approach to test automation base design

So why use two different tools when you could use just one?

Adopting a dual-tool approach can have several benefits — speed and pipeline optimization, flexibility, and code clarity – especially when combined with a test management system (TMS).

Benefits of a dual-tool approach

Jest and Mocha play to different strengths, making them a powerful combination. Jest’s comprehensive features, such as snapshot testing and automatic mocking, shine in fine-grained unit testing, where each unit needs to be checked in isolation. However, these capabilities can introduce overhead in deployment pipelines, particularly with large-scale test suites.

Mocha’s leaner architecture, on the other hand, is better suited for integration tests, which often involve more intricate systems. While Mocha requires more setup and configuration, this flexibility can be advantageous for tailored testing needs.

Both tools support parallel test execution, an essential feature for optimizing test pipelines. By assigning specific tools to distinct test types, you can refine pipeline efficiency through strategies like separate pipeline stages, resource caching, and targeted resource allocation. For instance, running unit tests in an early pipeline stage can quickly identify failures and prevent unnecessary execution of integration tests, which are typically more time-intensive.
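The fail-fast ordering described above can be sketched in a few lines: stages run in sequence and the pipeline stops at the first failure, so integration tests never start when unit tests are already failing. The stage objects and `runPipeline` are hypothetical; in practice this ordering lives in your CI configuration, and the commands shown (`npx jest --ci`, `npx mocha --parallel`) are only examples of how each stage might invoke its tool.

```javascript
// Hypothetical fail-fast pipeline: stages run in order and the first failing
// stage stops the run, so later (slower) stages never start.
function runPipeline(stages) {
  const executed = [];
  for (const [index, stage] of stages.entries()) {
    const passed = stage.run();
    executed.push({ name: stage.name, command: stage.command, passed });
    if (!passed) {
      const skipped = stages.slice(index + 1).map((s) => s.name);
      return { passed: false, executed, skipped };
    }
  }
  return { passed: true, executed, skipped: [] };
}

// `run` is stubbed here; in CI each stage would execute its command.
const unitStage = (passes) => ({
  name: "unit tests (Jest)",
  command: "npx jest --ci",
  run: () => passes,
});
const integrationStage = {
  name: "integration tests (Mocha)",
  command: "npx mocha --parallel",
  run: () => true,
};

const redBuild = runPipeline([unitStage(false), integrationStage]);
const greenBuild = runPipeline([unitStage(true), integrationStage]);
```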

This dual-tool approach also scales effectively with evolving project and organizational demands. Leveraging each tool’s strengths makes the testing framework more adaptable and lets teams tune their approach at each level, for example by varying testing ratios, assertion types, and testing patterns. Over time, this flexibility minimizes the need for major overhauls as testing needs grow more complex.

Integrating this dual-tool approach with a TMS extends these optimizations further. A test management platform reduces the overhead of managing multiple automation frameworks by centralizing test information, which cuts down on excess complexity and outdated information and makes metrics and data easier to report.

Intentionally assigning unit tests and integration tests to separate tools drives organizational and code clarity. While debates persist on how to define these test types, creating a clear distinction promotes alignment and intentional decision-making: as engineers write code, they have to decide what to test at which level. This separation also enables better source code organization and makes it easier to pull specific quality metrics to monitor the health of the codebase.
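One way to encode that separation is in each tool’s configuration, so Jest only picks up unit test files and Mocha only picks up integration specs. The sketch shows both config objects side by side; in a real project the first would be exported from `jest.config.js` and the second from `.mocharc.js`. The option names (`testMatch`, `collectCoverageFrom`, `spec`, `parallel`, `timeout`) are real, but the file-naming convention (`*.test.js` for unit tests, `*.spec.js` under `test/integration/` for integration tests) and the directory layout are assumptions.

```javascript
// Would be the module.exports of jest.config.js: unit tests only.
// Overriding testMatch matters because Jest's default pattern also picks up
// *.spec.js files, which this (hypothetical) layout reserves for Mocha.
const jestConfig = {
  testMatch: ["<rootDir>/src/**/*.test.js"],
  // Include every source file in coverage, even ones without tests yet.
  collectCoverageFrom: ["src/**/*.js", "!src/**/*.test.js"],
};

// Would be the module.exports of .mocharc.js: integration tests only.
const mochaConfig = {
  spec: ["test/integration/**/*.spec.js"],
  parallel: true, // run spec files in parallel worker processes
  timeout: 5000, // integration tests may need more than the 2000 ms default
};
```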

Strategy implementation considerations

Although the dual-tool approach provides many positive opportunities, implementing it will require intentional strategy at both the macro and micro levels.

A key challenge is achieving alignment across teams. Clear definitions of unit and integration tests and guidelines for deciding when and how to use each are essential to ensure consistency and clarity in the codebase. Integrating this approach with a TMS would alleviate some of these challenges by providing a centralized place for:

- Setting definitions for unit and integration tests and storing them in the TMS for easy replication

- Establishing test tagging and naming conventions

- Creating easily adaptable test cases and scenarios
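Naming and tagging conventions are easier to keep when they are checked automatically. The sketch below assumes a hypothetical convention in which every test title carries exactly one layer tag, `@unit` or `@integration`, and flags titles that break it. Tags embedded in titles also work for filtering, because Mocha’s `--grep` and Jest’s `--testNamePattern` both select tests by name.

```javascript
// Hypothetical convention: each test title carries exactly one layer tag.
const LAYER_TAGS = ["@unit", "@integration"];

// Extract @tags from a test title, e.g. "saves profile @integration @smoke".
function extractTags(title) {
  return title.match(/@[\w-]+/g) ?? [];
}

// Check one title against the convention; extra non-layer tags are allowed.
function checkTitle(title) {
  const layers = extractTags(title).filter((tag) => LAYER_TAGS.includes(tag));
  if (layers.length === 1) return { ok: true, layer: layers[0] };
  return {
    ok: false,
    reason: layers.length === 0 ? "missing layer tag" : "multiple layer tags",
  };
}

const report = [
  "renders view mode @unit",
  "saves updated profile @integration @smoke",
  "rejects invalid email",
  "renders edit mode @unit @integration",
].map((title) => ({ title, ...checkTitle(title) }));
```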

Maintaining a single source of truth for your test automation framework strategy will drive stronger adoption and keep everyone on the same page.

Pipeline optimization may also be necessary to see the full benefits of this approach. Adjustments such as tailored pipeline stages, caching strategies, and resource allocation can enhance efficiency and support the separation of unit and integration tests. This is another area where integrating with a TMS can be beneficial.

When a TMS is integrated with the CI/CD pipeline, the platform can become the main source of reporting and test metrics. With a TMS, you can track test automation data like failure patterns, flakiness, stack traces, and logs. Plus, you can link bugs to specific test cases.
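As an illustration of the metrics a TMS can derive from CI results, here is one common flakiness heuristic: a test is flagged when it both passed and failed on the same commit, since the code under test did not change between those runs. The record shape and `findFlakyTests` are hypothetical and not any particular TMS’s API.

```javascript
// Hypothetical run records, shaped like results a CI job might upload to a TMS.
const runs = [
  { test: "saves updated user data", commit: "a1b2c3", status: "passed" },
  { test: "saves updated user data", commit: "a1b2c3", status: "failed" },
  { test: "rejects an invalid email", commit: "a1b2c3", status: "failed" },
  { test: "rejects an invalid email", commit: "a1b2c3", status: "failed" },
  { test: "renders view mode", commit: "d4e5f6", status: "passed" },
];

// Flag tests whose outcome differed across runs of the same commit.
function findFlakyTests(records) {
  const outcomes = new Map(); // test -> Map(commit -> Set of statuses)
  for (const { test, commit, status } of records) {
    if (!outcomes.has(test)) outcomes.set(test, new Map());
    const byCommit = outcomes.get(test);
    if (!byCommit.has(commit)) byCommit.set(commit, new Set());
    byCommit.get(commit).add(status);
  }
  const flaky = [];
  for (const [test, byCommit] of outcomes) {
    const mixed = [...byCommit.values()].some(
      (statuses) => statuses.has("passed") && statuses.has("failed"),
    );
    if (mixed) flaky.push(test);
  }
  return flaky;
}

const flaky = findFlakyTests(runs);
```

Here “rejects an invalid email” is not flagged: it fails consistently, which points to a real defect rather than flakiness.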

This dual-tool strategy serves as a framework for the entire engineering organization, though the actual split between unit tests and integration tests will vary based on the needs of each area of the codebase. Testers and quality professionals play a critical role here as well, because they can help teams identify which tests to write at which level.

Build a tailored test automation strategy with a dual-tool approach

Test automation framework strategy design — besides being a mouthful — is a multifactorial strategy problem. Strategy design is inherently specific and unique to the needs and opportunities of the space it is being designed for. For test automation framework strategy design, some of these factors can include problem/risk assessment, tool analysis and selection, existing code coverage, budget, staffing, and feature development roadmap.

With these considerations in mind, this dual-tool approach to building out unit tests and integration tests can be an effective solution in many scenarios. Adopting an approach that enables flexibility, organizational and code clarity, and pipeline optimizations through using both Mocha and Jest builds a strong base to integrate into your larger test automation framework strategy.